Data visualization

Aims

In writing this chapter, I have two aims.

- The first aim for this chapter is to expose students to an outline summary of some key ideas and techniques for data visualization in psychological science.

There is an extensive experimental and theoretical literature concerning data visualization, what choices we can or should make, and how these choices have more or less impact, in different circumstances or for different audiences. Here, we can only give you a flavour of the on-going discussion. If you are interested, you can follow-up the references in the cited articles. But, using this chapter, I hope that you will gain a sense of the reasons how or why we may choose to do different things when we produce visualizations.

- The second aim is to provide materials, and to show visualizations, to raise an awareness of what results come from making different choices. This is because we hope to encourage students to make choices based on reasons and it is hard to know what choices count without first seeing what the results might look like.

In my experience, knowing that there are choices is the first step. In proprietary software packages like Excel and SPSS there are plenty of choices but these are limited by the menu systems to certain combinations of elements. Here, in using R to produce visualizations, there is much more freedom, and much more capacity to control what a plot shows and how it looks, but knowing where to start has to begin with seeing examples of what some of the choices result in.

At the end of the chapter, I highlight some resources you can use in independent learning for further development, see Section 1.9.

So, we are aiming to (1.) start to build insight into the choices we make and (2.) provide resources to enable making those choices in data visualization.

Why data visualization matters

Data visualization is important. Building skills in visualization matters to you because, even if you do not go on to professional work in which you produce visualizations you will certainly be working in fields in which you need to work with, or read or evaluate, visualizations.

You have already been doing this: our cultural or visual environment is awash in visualizations, from weather maps to charts on the television news. It will empower you if you know a bit about how or why these visualizations are produced in the ways that they are produced. That is a complex development trajectory but we can get started here.

In the context of the research report exercise, see ?@sec-pipeline, I mention data visualization in relation to stages of the data analysis pipeline or workflow. But the reality is that, most of the time, visualization is useful and used at every stage of data analysis workflow.

Three kinds of honesty

I write this chapter with three kinds of honesty in mind.

- I will expose some of the process involved in thinking about and preparing for the production of plots.

- I can assure you that when a professional data analysis worker produces plots in R they will be looking for information about what to do, and how to do it, online. I will provide links to the information I used, when I wrote this chapter, in order to figure out the coding to produce the plots.

- I won’t pretend that I got the plots “right first time” or that I know all the coding steps by memory. Neither is true for me and they would not be true for most professionals if they were to write a chapter like this. Looking things up online is something we all do so showing you where the information can be found will help you grow your skills.

- I will show how we often prepare for the production of plots by processing the data that we must use to inform the plots.

- We almost always have to process the data we collected or gathered together from our exerimental work or our observations.

- In this chapter, some of the coding steps I will outline are done in advance of producing a plot, to give the plotting code something to work with.

- Knowing about these processing steps will ensure you have more flexibility or power in getting your plots ready.

- I am going to expose variation, as often as I can, in observations.

- We typically collect data about or from people, about their responses to things we may present (stimuli) or, given tasks, under different conditions, or concerning individual differences on an array of dimensions.

- Sources of variation will be everywhere in our data, even though we often work with statistical analyses (like the t-test) that focus our attention on the average participant or the average response.

- Modern analysis methods (like mixed-effects models) enable us to account for sources of variation systematically, so it is good to begin thinking about, say, how people vary in their response to different experimental conditions from early in your development.

Our approach: tidyverse

The approach we will take is to focus on step-by-step guides to coding. I will show plots and I will walk through the coding steps, explaining my reasons for the choices I make.

We will be working with plotting functions like ggplot() provided in libraries like ggplot2 [@R-ggplot2] which is part of the tidyverse [@R-tidyverse] collection of libraries.

You can access information about the tidyverse collection here.

Grammar of graphics

The gg in ggplot stands for the “Grammar of Graphics”, and the ideas motivating the development of the ggplot2 library of functions are grounded in the ideas concerning the grammar of graphics, set out in the book of that name [@wilkinson2013].

What is helpful to us, here, is the insight that the code elements (and how they result in visual elements) can be identified as building blocks, or layers, that we can add and adjust piece by piece when we are producing a visualization.

A plot represents information and, critically, every time we write ggplot code we must specify somewhere the ways that our plot links data to something we see. In terms of ggplot, we specify aesthetic mappings using the aes() code to tell R what variables should be mapped e.g. to x-axis or y-axis location, to colour, or to group assignments. We then add elements to instruct R how to represent the aesthetic mappings as visual objects or attributes: geometric objects like a scatter of points geom_point() or a collection of bars geom_bar(); or visual features like colour, shape or size e.g. aes(colour = group). We can add visual elements in a series of layers, as shall see in the practical demonstrations of plot construction. We can adjust how scaling works. And we can add annotation, labels, and other elements to guide and inform the attention of the audience.

You can read more about mastering the grammar here.

Pipes

We know that (some of) you want to see more use of pipes (represented as %>% or |>) in coding. There will be plenty of pipes in this chapter.

In using pipes in the code, I am structuring the code so that it works — and is presented — in a sequence of steps. There are different ways to write code but I find this way easier to work with and to read and I think you will too.

Let’s take a small example:

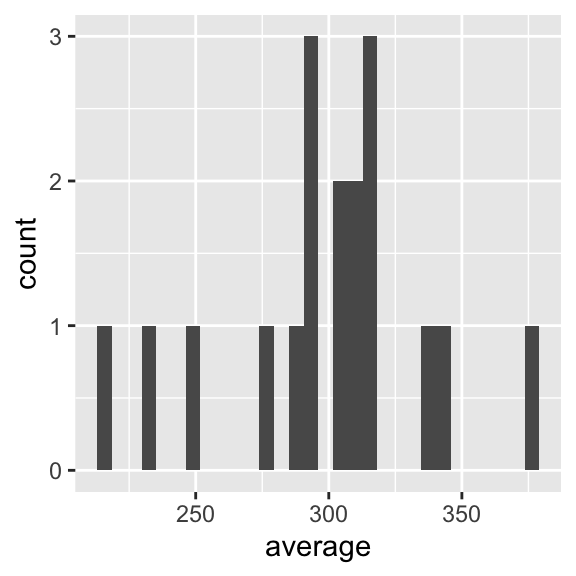

sleepstudy %>%

group_by(Subject) %>%

summarise(average = mean(Reaction)) %>%

ggplot(aes(x = average)) +

geom_histogram()

Here, we work through a series of steps:

sleepstudy %>%we first tell R we want to work with the dataset calledsleepstudyand the%>%pipe symbol at the end of the line tells R that we want it to pass that dataset on to the next step for what happens next.group_by(Subject) %>%tells R that we want it to do something, here, group the rows of data according to theSubject(participant identity) coding variable, and pass the grouped data on to the next step for what happens following.summarise(average = mean(Reaction)) %>%tells R to take the grouped variable and calculate a summary, the mean Reaction score, for each group of observations for each participant. The%>%pipe at the end of the line tells R to pass the summary dataset of mean Reaction scores on to the next process.ggplot(aes(x = average)) +tells R that we want it to take these summaryaverageReaction scores and make a plot out of them.geom_histogram()tells R that we want a histogram plot.

What you can see is that each line ending in a %> pipe passes something on to the next line. A following line takes the output of the process coded in the preceding line, and works with it.

Each step is executed in turn, in strict sequence. This means that if I delete line 3 summarise(average = mean(Reaction)) %>% then the following lines cannot work because the ggplot() function will be looking for a variable average that does not yet exist.

- You can see that in the data processing part of the code, successive steps in data processing end in a pipe

%>%. - In contrast, successive steps of the plotting code add

ggplotelements line by line with each line (except the last) ending in a+.

Notice that none of the processing steps actually changes the dataset called sleepstudy. The results of the process exist and can be used only within the sequence of steps that I have coded. If you want to keep the results of processing steps, you need to assign an object name to hold them, and I show how to do this, in the following.

You can read a clear explanation of pipes here.

You can use the code you see:

- Each chunk of code is highlighted in the chapter.

- If you hover a cursor over the highlighted code a little clipboard symbol appears in the top right of the code chunk.

- Click on the clipboard symbol to copy the code, paste it into your own R-Studio instance.

- Then experiment: try out things like removing or commmenting out lines, or changing lines, to see what effect that has.

- Breaking things, or changing things, helps to show what each bit of code does.

Key ideas

Data visualization is not really about coding, as about thinking.

- What are our goals?

- Why do we make some choices instead of others?

Goals

@gelman2013 outline the goals we may contemplate when we produce or evaluate visual data displays. In general, they argue, we are doing one or both of two things.

- Discovery

- Communication

In practice, this may involve the following (I paraphrase them, here).

- Discovery goals

- Getting a sense of what is in a dataset, checking assumptions, confirming expectations, and looking for distinct patterns.

- Making sense of the scale and complexity of the dataset.

- Exploring the data to reveal unexpected aspects. As we will see, using small multiples (grids of plots) can often help with this.

- Communication goals

- We communicate about our data to ourselves and to others. The process of constructing and evaluating a plot is often one way we speak to ourselves about own data, developing an understanding of what we have got. Once we have done this for ourselves, we can better figure out how to do it to benefit the understanding of an audience.

- We often use a plot to tell a story: the story of our study, our data, or our insight and how we get to it.

- We can use visualizations to attract attention and stimulate interest. Often, in presenting data to an audience through a talk or a report we need to use effective visualizations to ensure we get attention and that we locate the attention of our audience in the right places.

Psychological science of data visualization

You will see a rich variety of data visualizations in media and in the research literature. You will know that some choices, in the production of those visualizations, appear to work better than others.

Some of the reasons why some choices work better will relate to what we can understand in terms of the psychological science of how visual data communication works. A useful recent review of relevant research is presented by @franconeri2021.

@franconeri2021 provide a reason for working on visualizations: they allow us humans to process an array of information at once, often faster than if we were reading about the information, bit by bit. Effective visualization, then, is about harnessing the power of the human visual system, or visual cognition, for quick, efficient, information processing. Critically for science, in addition, visualizations can be more effective for discovering or communicating the critical features of data than summary statistics, as we shall see.

In producing visualizations, we often work with a vocabulary or palette of objects or visual elements. @franconeri2021 discuss how visualizations rely on visual channels to transform numbers into images that we can process visually.

- Dot plots and scatterplots represent values as position.

- Bar graphs represent values as position (the heights of the tops of bars) but also as lengths.

- Angles are presented when we connect points to form a line, allowing us to encode the differences between points.

- Intensity can be presented through variation in luminance contrast or colour saturation.

These channels can be ordered by how precisely they have been found to communicate different numeric values to the viewer. Your audience may more accurately perceive the difference between two quantities if you communicate that difference through the difference in the location of two points than if you ask your audience to compare the angles of two lines or the intensity of two colour spots.

In constructing data visualizations, we often work with conventions, established through common practice in a research tradition. For example, if you are producing a scatterplot, then most of the time your audience will expect to see the outcome (or dependent variable) represented by the vertical height (on the y-axis) of points. And your audience will expect that higher points represent larger quantities of the y-axis variable.

In constructing visualizations, we need to be aware of the cognitive work that we require the audience to do. Comparisons are harder, requiring more processing and imposing more load on working memory. You can help your reader by guiding their attention, by grouping or ordering visual elements to identify the most important comparisons. We can vary colour and shape to group or distinguish visual elements. We can add annotation or elements like lines or arrows to guide attention.

Visualizations are presented in context, whether in presentations or in reports. This context should be provided, by you the producer, with the intention to support the communication of your key messages. A visual representation, a plot, will be presented with a title, maybe a title note, maybe with annotation in the plot, and maybe with accompanying text. You should use these textual elements to lead your audience, to help them make sense of what they are looking at.

The diversity of audiences means that we should habitually add alt text for data visualizations to help those who use screen readers by providing a summary description of what images show. This chapter has been written using Quarto and rendered to .html with alt text included along with all images. Please do let me know if you are using a screen reader and the alt text description is or is not so helpful.

You can read a helpful explanation of alt text here.

If you use colour in images then we should use colour bind colour palettes.

You can read about using colour blind palettes here or here.

In the following practical exercises, we work with many of the insights in our construction of visualizations.

A quick start

We can get started before we understand in depth the key ideas or the coding steps. This will help to show where we are going. We will work with the sleepstudy dataset.

I will model the process, to give you an example workflow:

- the data, where they come from — what we can find out;

- how we approach the data — what we expect to see;

- how we visualize the data — discovery, communication.

Sleepstudy data

When we work with R, we usually work with functions like ggplot() provided in libraries like ggplot2 [@R-ggplot2]. These libraries typically provide not only functions but also datasets that we can use for demonstration and learning.

The lme4 library [@R-lme4] provides the sleepstudy dataset and we will take a look at these data to offer a taste of what we can learn to do. Usually, information about the R libraries we use will be located on the Comprehensive R Archive Network (CRAN) web pages, and we can find the technical reference information for lme4 in the CRAN reference manual for the library, where we see that the sleepstudy data are from a study reported by [@belenky2003]. The manual says that the sleepstudy dataset comprises:

A data frame with 180 observations on the following 3 variables. [1.] Reaction – Average reaction time (ms) [2.] Days – Number of days of sleep deprivation [3.] Subject – Subject number on which the observation was made.

We can take a look at the first few rows of the dataset.

sleepstudy %>%

head(n = 4) Reaction Days Subject

1 249.5600 0 308

2 258.7047 1 308

3 250.8006 2 308

4 321.4398 3 308What we are looking at are:

The average reaction time per day (in milliseconds) for subjects in a sleep deprivation study. Days 0-1 were adaptation and training (T1/T2), day 2 was baseline (B); sleep deprivation started after day 2.

The abstract for @belenky2003 tells us that participants were deprived of sleep and the impact of relative deprivation was tested using a cognitive vigilance task for which the reaction times of responses were recorded.

So, we can expect to find:

- A set of rows corresponding to multiple observations for each participant (

Subject) - A reaction time value for each participant (

Reaction) - Recorded on each

Day

Discovery and communication

In data analysis work, we often begin with the objective to understand the structure or the nature of the data we are working with.

You can call this the discovery phase:

- what have we got?

- does it match our expectations?

If these are reaction time data (collected in an cognitive experiment) do they look like cognitive reaction time data should look? We would expect to see a skewed distribution of observed reaction times distributed around an average located somewhere in the range 200-700ms.

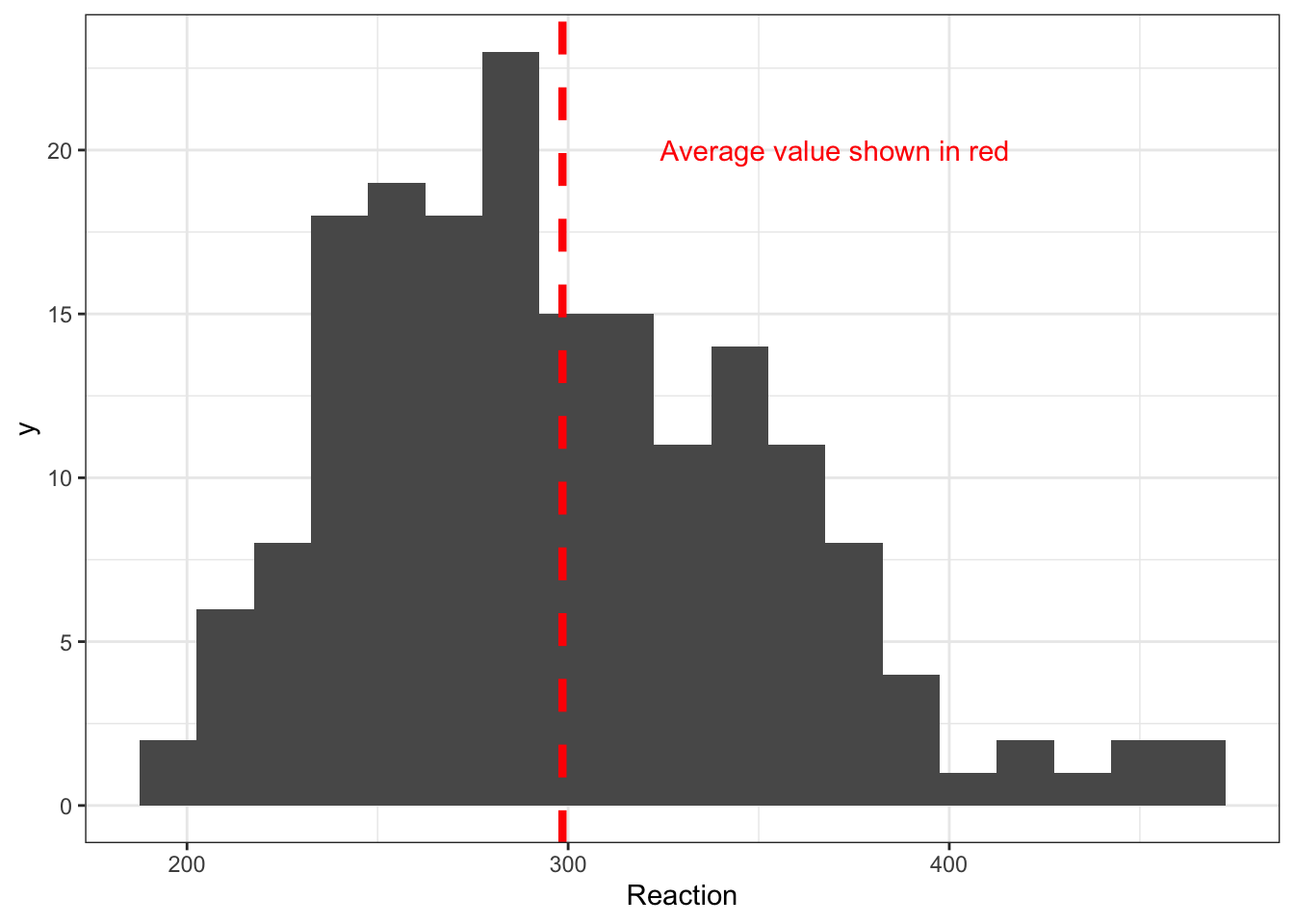

Figure 2 represents the distribution of reaction times in the sleepstudy dataset.

I provide notes on the code steps that result in the plot. Click on the Notes tab to see them. Later, I will discuss some of these elements.

sleepstudy %>%

ggplot(aes(x = Reaction)) +

geom_histogram(binwidth = 15) +

geom_vline(xintercept = mean(sleepstudy$Reaction),

colour = "red", linetype = 'dashed', size = 1.5) +

annotate("text", x = 370, y =20,

colour = "red",

label = "Average value shown in red") +

theme_bw()Warning: Using `size` aesthetic for lines was deprecated in ggplot2 3.4.0.

ℹ Please use `linewidth` instead.

sleepstudy reaction time data

The plotting code pipes the data into the plotting code steps to produce the plot. You can see some elements that will be familiar to you and some new elements.

sleepstudy %>%

ggplot(aes(x = Reaction)) +

geom_histogram(binwidth = 15) +

geom_vline(xintercept = mean(sleepstudy$Reaction),

colour = "red", linetype = 'dashed', size = 1.5) +

annotate("text", x = 370, y =20,

colour = "red",

label = "Average value shown in red") +

theme_bw()Let’s go through the code step-by-step:

sleepstudy %>%asks R to take thesleepstudydataset and%>%pipe it to the next steps for processing.ggplot(aes(x = Reaction)) +takes thesleepstudydata and asks R to use theggplot()function to produce a plot.aes(x = Reaction)tells R that in the plot we want it to map theReactionvariable values to locations on the x-axis: this is the aesthetic mapping.geom_histogram(binwidth = 15) +tells R to produce a histogram then add a step.geom_vline(...) +tells R we want to draw vertical line.xintercept = mean(sleepstudy$Reaction), ...tells R to draw the vertical line at the mean value of the variableReactionin thesleepstudydataset.colour = "red", linetype = 'dashed', size = 1.5tells R we want the vertical line to be red, dashed and 1.5 times the usual size.annotate("text", ...)tells R we want to add a text note.x = 370, y =20, ...tells R we want the note added at the x,y coordinates given.colour = "red", ..;and we want the text in red....label = "Average value shown in red") +tells R we want the text note to say that this is where the average is.theme_bw()lastly, we change the theme.

Figure 2 shows a distribution of reaction times, ranging from about 200ms to 500ms. The distribution has a peak around 300ms. The location of the mean is shown with a dashed red line. The distribution includes a long tail of longer times. This is pretty much what we would expect to see.

We may wish to communicate the information we gain through using this histogram, in a presentation or in a report.

Discovery and communication

Let us imagine that it is our study. (Here, we shall not concern ourselves too much — with apologies — with understanding what the original study authors actually did.)

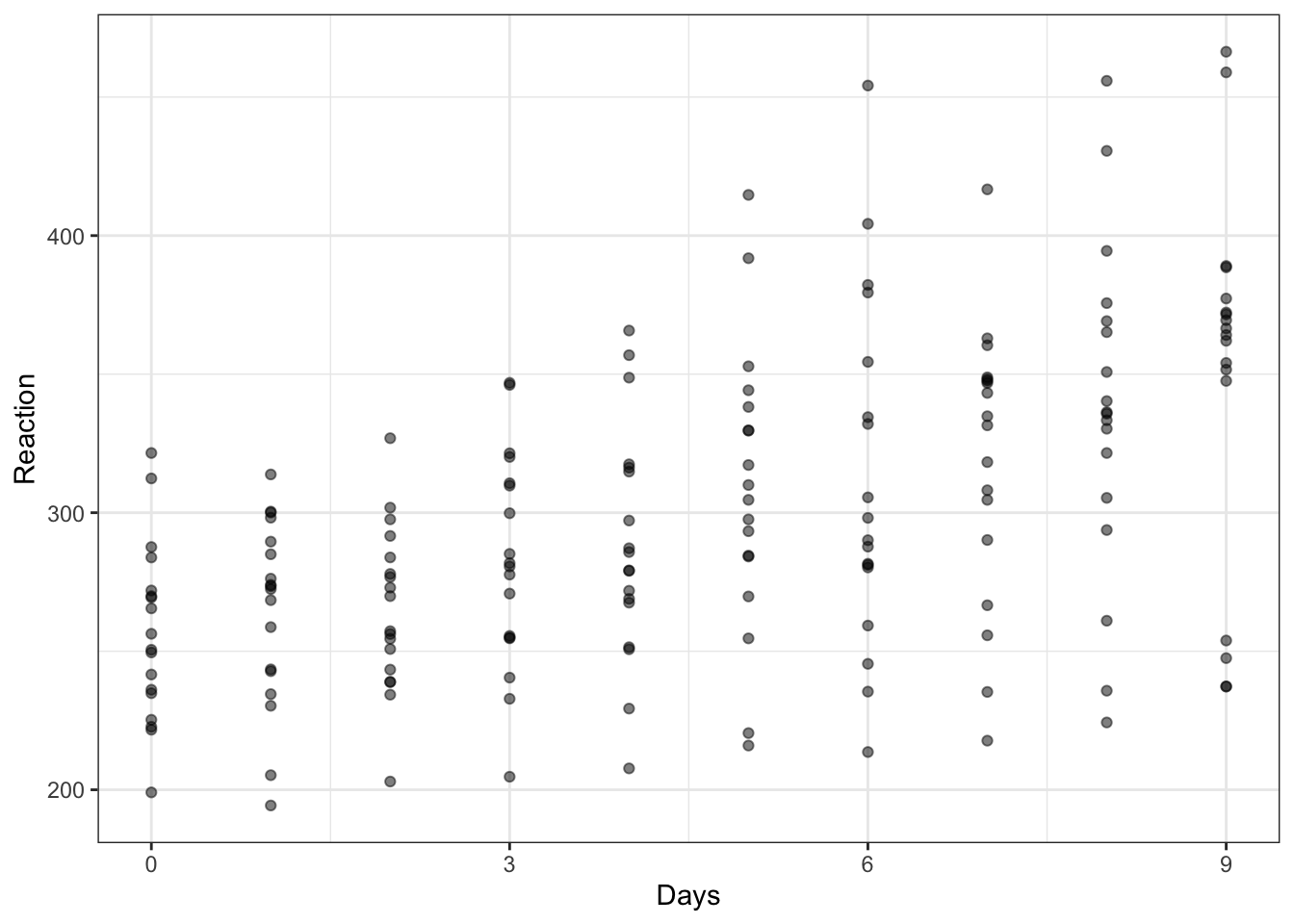

If we are looking at the impact of sleep deprivation on cognitive performance, we might predict that reaction times got longer (responses slowed) as the study progressed. Is that what we see?

To examine the association between two variables, we often use scatterplots. Figure 3 is a scatterplot indicating the possible association between reaction time and days in the sleepstudy data. Points are ordered on x-axis from 0 to 9 days, on y-axis from 200 to 500 ms reaction time.

I provide notes on the code steps that result in the plot. Click on the Notes tab to see them. Later, I will discuss some of these elements.

sleepstudy %>%

ggplot(aes(x = Days, y = Reaction)) +

geom_point(size = 1.5, alpha = .5) +

scale_x_continuous(breaks = c(0, 3, 6, 9)) +

theme_bw()

sleepstudy data

Notice the numbered steps in producing this plot.

- Name the dataset: the dataset is called

sleepstudyin thelme4library which makes it available therefore we use this name to specify it. sleepstudy %>%uses the%>%pipe operator to pass this dataset toggplot()to work with, in creating the plot. Becauseggplot()now knows about thesleepstudydata, we can next specify what aesthetic mappings we need to use.ggplot(aes(x = Days, y = Reaction)) +tells R that we want to mapDaysinformation to x-axis position andReaction(response time) information to y-axis position.geom_point() +tells R that we want to locate points – creating a scatterplot – at the paired x-axis and y-xis coordinates.scale_x_continuous(breaks = c(0, 3, 6, 9)) +is new: we tell R that we want the x-axis tick labels – the numbers R shows as labels on the x-axis – at the values 0, 3, 6, 9 only.theme_bw()requires R to make the plot background white and the foreground plot elements black.

You can find more information on scale_ functions in the ggplot2 reference information.

https://ggplot2.tidyverse.org/reference/scale_continuous.html

The plot suggests that reaction time increases with increasing number of days.

In producing this plot, we are both (1.) engaged in discovery and, potentially, (2.) able to do communication.

- Discovery: is the relation between variables what we should expect, given our assumptions?

- Communication: to ourselves and others, what relation do we observe, given our sample?

At this time, we have used and discussed scatterplots before, why we use them, how we write code to produce them, and how we read them.

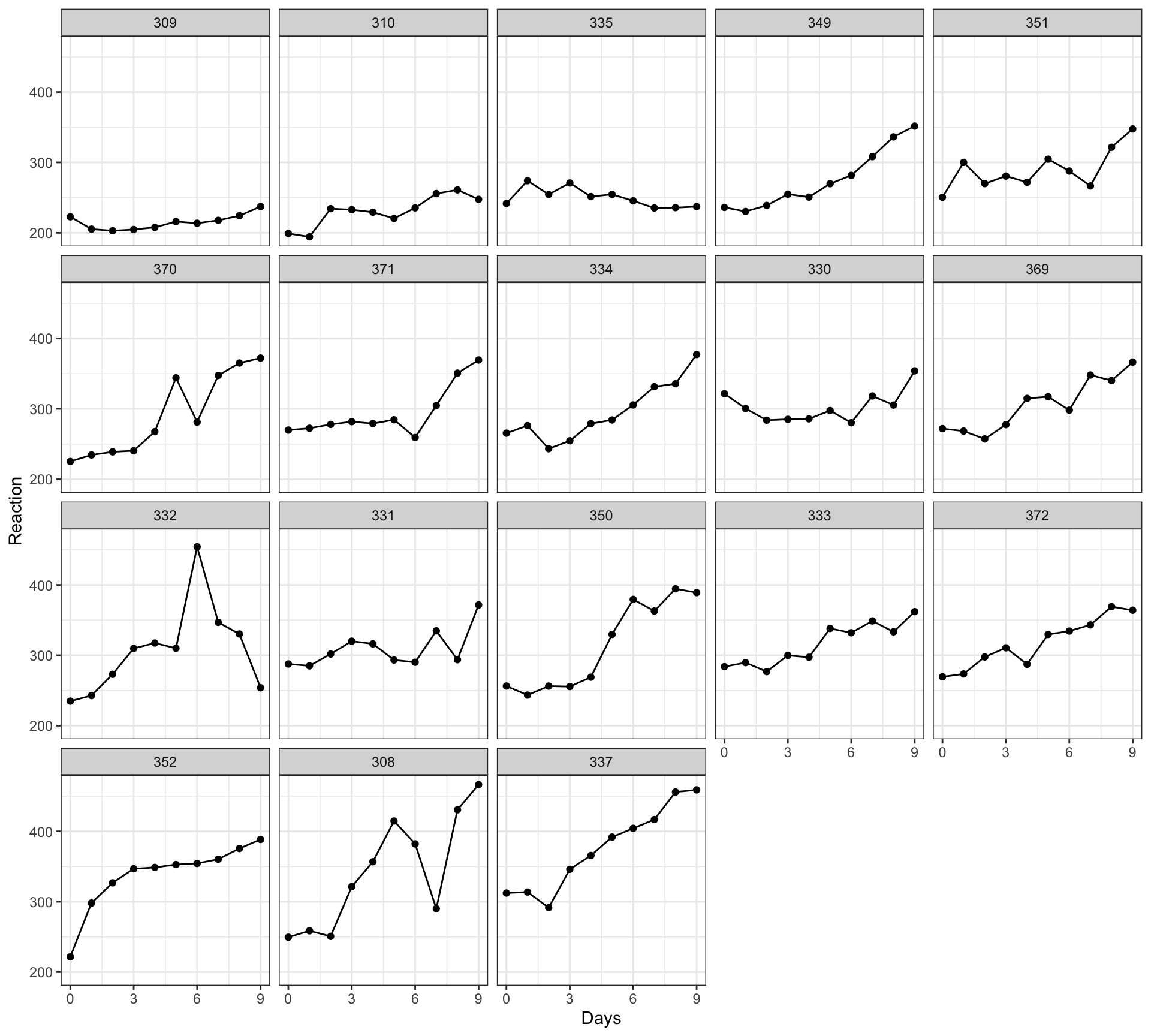

With two additional steps we can significantly increase the power of the visualization. Figure 4 is a grid of scatterplots indicating the possible association between reaction time and days separately for each participant.

Again, I hide an explanation of the coding steps in the Notes tab: the interested reader can click on the tab to view the step-by-step guide to what is happening.

sleepstudy %>%

group_by(Subject) %>%

mutate(average = mean(Reaction)) %>%

ungroup() %>%

mutate(Subject = fct_reorder(Subject, average)) %>%

ggplot(aes(x = Days, y = Reaction)) +

geom_point() +

geom_line() +

scale_x_continuous(breaks = c(0, 3, 6, 9)) +

facet_wrap(~ Subject) +

theme_bw()

Notice the numbered steps in producing this plot.

You can see that the block of code combines data processing and data plotting steps. Let’s look at the data processing steps then the plotting steps in order.

First: why are we doing this? My aim is to produce a plot in which I show the association between Days and Reaction for each Subject individually. I suspect that the association between Days and Reaction may be stronger – so the trend will be steeper – for participants who are slower overall. I suspect this because, given experience, I know that slower, less accurate, participants tend to show larger effects.

So: in order to get a grid of plots, one plot for each Subject, in order of the average Reaction for each individual Subject, I need to first calculate the average Reaction then order the dataset rows by those averages. I do that in steps, using pipes to feed information from one step to the next step, as follows.

sleepstudy %>%tells R what data I want to use, and pipe it to the next step.group_by(Subject)tells R I want it to work with data (rows) grouped bySubjectidentity code,%>%piping the grouped form of the data forward to the next stepmutate(average = mean(Reaction))usesmutate()to create a new variableaveragewhich I calculate as themean()ofReaction, piping the data with this additional variable%>%forward to the next step.ungroup() %>%tells R I want it to go back to working with the data in rows not grouped rows, and pipe the now ungrouped form of the data to the next step.mutate(Subject = fct_reorder(Subject, average))tells R I want it to sort the rows of the wholesleepstudydataset in order, moving groups of rows identified bySubjectso that data forSubjectcodes associated with faster times are located near the top of the dataset.

These data, ordered by Subject by the average Reaction for each participant, are then %>% piped to ggplot to create a plot.

ggplot(aes(x = Days, y = Reaction)) +specifies the aesthetic mappings, as before.geom_point() +asks R to locate points at the x-axis, y-axis coordinates, creating a scatterplot, as before.geom_line() +is new: I want R to connect the points, showing the trend in the association betweenDaysandReactionfor each person.scale_x_continuous(breaks = c(0, 3, 6, 9)) +fixes the x-axis labels, as before.facet_wrap(~ Subject) +is the big new step: I ask R to plot a separate scatterplot for the data for each individualSubject.

You can see more information about facetting here:

https://ggplot2.tidyverse.org/reference/facet_wrap.html

In short, with the facet_wrap(~ .) function, we are asking R to subset the data by a grouping variable, specified (~ .) by replacing the dot with the name of the variable.

Notice that I use %>% pipes to move the data processing forward, step by step. But I use + to add plot elements, layer by layer.

Figure Figure 4 is a grid or lattice of scatterplots revealing how the possible association between reaction time and days varies quite substantially between the participants in the sleepstudy data. Most plots indicate that reaction time increases with increasing number of days. However, different participants show this trend to differing extents.

What are the two additions I made to the conventional scatterplot code?

- I calculated the average reaction time per participant, and I ordered the data by those averages.

- I facetted the plots, breaking them out into separate scatterplots per participant.

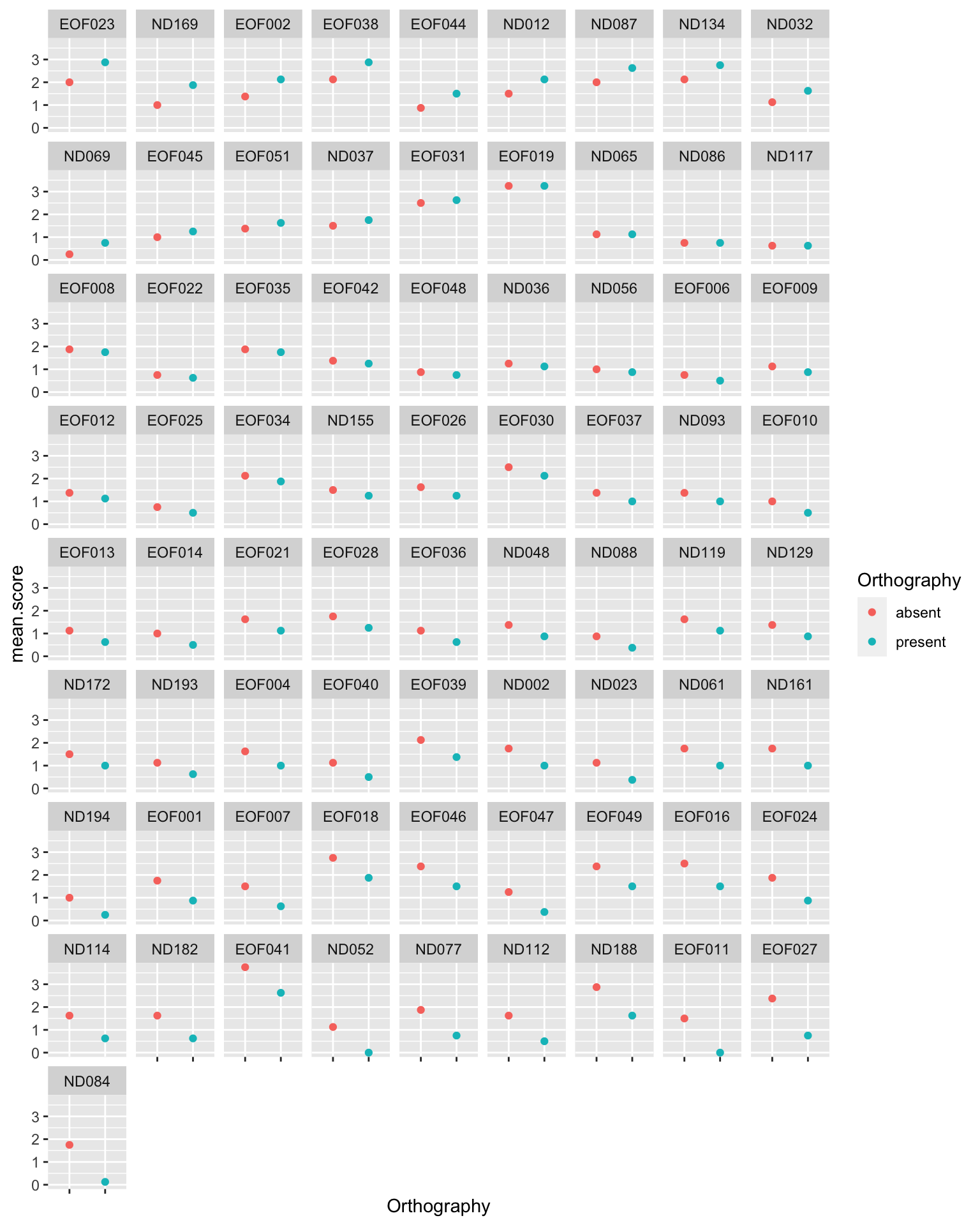

Why would you do this? Variation between people or groups, in effects or in average outcomes, are often to be found in psychological data [@vasishth2021]. The variation between people that we see in these data — in the average response reaction time, and in how days affects times — would motivate the use of linear mixed-effects models to analyze the way that sleep patterns affect responses in the sleep study [@Pinheiro2000a].

The data processing and plotting functions in the tidyverse collection of libraries enable us to discover and to communicate variation in behaviours that should strengthen our and others’ scientific understanding.

Summary: Quick start lessons

What we have seen, so far, is that we can make dramatic changes to the appearance of visualizations (e.g., through faceting) and also that we can exert fine control over the details (e.g., adjusting scale labels). What we need to stop and consider are what we want to do (and why), in what order.

We have seen how we can feed a data process into a plot to first prepare then produce the plot in a sequence of steps. In processing the data, we can take some original data and extract or calculate information that we can use for our plotting e.g. calculating the mean of a distribution in order to then highlight where that mean is located.

We have also seen the use of plots, and the editing of their appearance, to represent information visually. We can verbalize the thought process behind the production of these plots through a series of questions.

- Are we looking at the distribution of one variable (if yes: consider a histogram) or are we comparing the distributions of two or more variables (if yes: consider a scatterplot)?

- Is there a salient feature of the plot we want to draw the attention of the audience to? We can add a visual element (like a line) and annotation text to guide the audience.

- Are we interested in variation between sub-sets of the data? We can facet the plot to examine variation between sub-sets (facets) enabling the comparison of trends.

A practical guide to visualization ideas

In this guide, we illustrate some of the ideas about visualization we discussed at the start, working with practical coding examples. We will be working with real data from a published research project. We are going to focus the practical coding examples on the data collected for the analysis reported by @ricketts2021.

We will focus on working with the data from one of the tasks, in one of the studies reported by @ricketts2021.

- This means that you can consolidate your learning by applying the same code moves to data from the other task in the same study, or to data from the other study.

- In applying code to other data, you will need to be aware of differences in, say, the way that some things like the outcome response variable are coded.

You can then further extend your development by trying out the coding moves for yourself using the data collected by @rodríguez-ferreiro2020.

- These data are from a quite distinct kind of investigation, on a different research topic than the topic we will be exploring through our working examples.

- However, some aspects of the data structure are similar.

- Critically, the data are provided with comprehensive documentation.

Set up for coding

To do our practical work, we will need functions and data. We get these at the start of our workflow.

Get libraries

We are going to need the lme4, patchwork, psych and tidyverse libraries of functions and data.

library(ggeffects)

library(patchwork)

library(psych)

library(tidyverse)Get the data

You can access the data we are going to use in two different ways.

Get the data from project repositories

The data associated with both [@ricketts2021] and [@rodríguez-ferreiro2020] are freely available through project repositories on the Open Science Framework web pages.

You can get the data from the @ricketts2021 paper through the repository located here.

You can get the data from the @rodríguez-ferreiro2020 paper through the repository located here.

These data are associated with full explanations of data collection methods, materials, data processing and data analysis code. You can review the papers and the repository material guides for further information.

In the following, I am going to abstract summary information about the @ricketts2021 study and data. I shall leave you to do the same for the @rodríguez-ferreiro2020 study.

Get the data through a downloadable archive

Download the data-visualization.zip files folder and upload the files to RStudio Server.

The folder includes the @ricketts2021 data files:

concurrent.orth_2020-08-11.csvconcurrent.sem_2020-08-11.csvlong.orth_2020-08-11.csvlong.sem_2020-08-11.csv

The folder also includes the @rodríguez-ferreiro2020 data files:

PrimDir-111019_English.csvPrimInd-111019_English.csv

- These data files are collected together in a folder for download, for your convenience, but the version of record for the data for each study comprise the files located on the OSF repositories associated with the original articles.

Information about the Ricketts study and the datasets

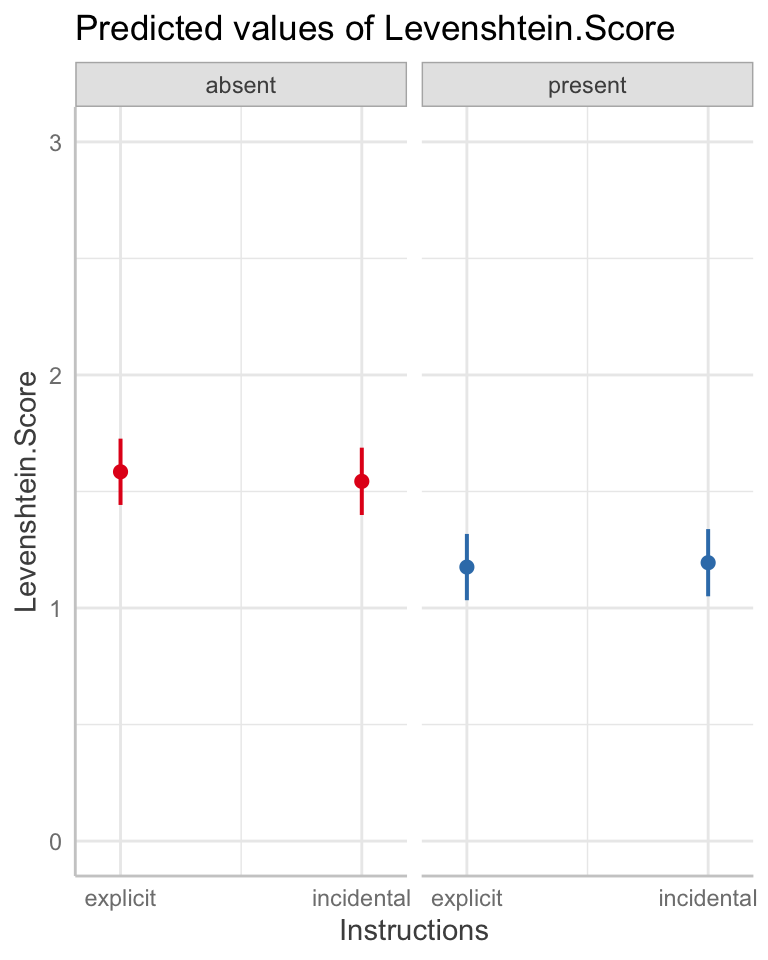

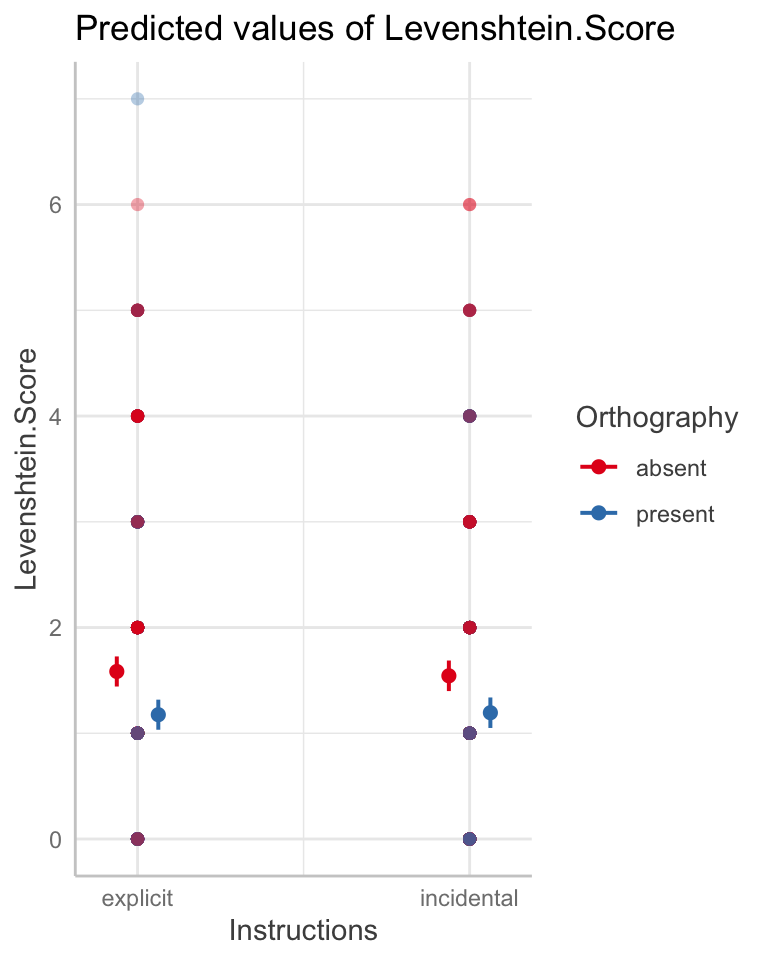

@ricketts2021 conducted an investigation of word learning in school-aged children. They taught children 16 novel words in a study with a 2 x 2 factorial design. In this investigation, they tested whether word learning is helped by presenting targets for word learning with their spellings, and whether learning is helped by telling children that they would benefit from the presence of those spellings.

The presence of orthography (the word spelling) was manipulated within participants (orthography absent vs. orthography present): for all children, eight of the words were taught with orthography present and eight with orthography absent. Instructions (incidental vs. explicit) were manipulated between participants such that children in the explicit condition were alerted to the presence of orthography whereas children in the incidental condition were not.

A pre-test was conducted to establish participants’ knowledge of the stimuli. Then, each child was seen for three 45-minute sessions to complete training (Sessions 1 and 2) and post-tests (Session 3). @ricketts2021 completed two studies: Study 1 and Study 2. All children, in both studies 1 and 2 completed the Session 3 post-tests.

In Study 1, longitudinal post-test data were collected because children were tested at two time points. Children were administered post-tests in Session 3, as noted: Time 1. Post-tests were then re-administered approximately eight months later at Time 2 (\(M = 241.58\) days from Session 3, \(SD = 6.10\)). In Study 2, the Study 1 sample was combined with an older sample of children. The additional Study 2 children were not tested at Time 2, and the analysis of Study 2 data did not incorporate test time as a factor.

The outcome data for both studies consisted of performance on post-tests.

The semantic post-test assessed knowledge for the meanings of newly trained words using a dynamic or sequential testing approach. I will not explain this approach in more detail, here, because the practical visualization exercises focus on the orthographic knowledge (spelling knowledge) post-test, explained next.

The orthographic post-test was included to ascertain the extent of orthographic knowledge after training. Children were asked to spell each word to dictation and spelling productions were transcribed for scoring. Responses were scored using a Levenshtein distance measure indexing the number of letter deletions, insertions and substitutions that distinguish between the target and child’s response. The maximum score is 0, with higher scores indicating less accurate responses.

For the Study 1 analysis, the files are:

long.orth_2020-08-11.csvlong.sem_2020-08-11.csv

Where long indicates the longitudinal nature of the data-set.

For the Study 2 analysis, the files are:

concurrent.orth_2020-08-11.csvconcurrent.sem_2020-08-11.csv

Where concurrent indicates the inclusion of concurrent (younger and older) child participant samples.

Each column in each data-set corresponds to a variable and each row corresponds to an observation (i.e., the data are tidy). Because the design of the study involves the collection of repeated observations, the data can be understood to be in a long format.

Each child was asked to respond to 16 words and, for each of the 16 words, we collected post-test responses from multiple children. All words were presented to all children.

We explain what you will find when you inspect the .csv files, next.

Data – variables and value coding

The variables included in .csv files are listed, following, with information about value coding or calculation.

Participant— Participant identity codes were used to anonymize participation. Children included in studies 1 and 2 – participants in the longitudinal data collection – were coded “EOF[number]”. Children included in Study 2 only (i.e., the older, additional, sample) were coded “ND[number]”.Time— Test time was coded 1 (time 1) or 2 (time 2). For the Study 1 longitudinal data, it can be seen that each participant identity code is associated with observations taken at test times 1 and 2.Study— Observations taken for children included in studies 1 and 2 – participants in the longitudinal data collection – were coded “Study1&2”. Children included in Study 2 only (i.e., the older, additional, sample) were coded “Study2”.Instructions— Variable coding for whether participants undertook training in the explicit or incidental conditions.Version— Experiment administration codingWord— Letter string values show the words presented as stimuli to children.Consistency_H— Calculated orthography-to-phonology consistency value for each word.Orthography— Variable coding for whether participants had seen a word in training in the orthography absent or present conditions.Measure— Variable coding for the post-test measure: Sem_all if the semantic post-test; Orth_sp if the orthographic post-test.Score— Variable coding for response category.

For the semantic (sequential or dynamic) post-test, responses were scored as corresponding to:

- 3 – correct response in the definition task

- 2 – correct response in the cued definition task

- 1 – correct response in the recognition task

- 0 – if the item wasn’t correctly defined or recognised

For the orthographic post-test, responses were scored as:

- 1 – correct, if the target spelling was produced in full

- 0 – incorrect

However, the analysis reported by @ricketts2021 focused on the more sensitive Levenshtein distance measure (see following).

WASImRS— Raw score – Matrix Reasoning subtest of the Wechsler Abbreviated Scale of IntelligenceTOWREsweRS— Raw score – Sight Word Efficiency (SWE) subtest of the Test of Word Reading Efficiency; number of words read correctly in 45 secondsTOWREpdeRS— Raw score – Phonemic Decoding Efficiency (PDE) subtest of the Test of Word Reading Efficiency; number of nonwords read correctly in 45 secondsCC2regRS— Raw score – Castles and Coltheart Test 2; number of regular words read correctlyCC2irregRS— Raw score – Castles and Coltheart Test 2; number of irregular words read correctlyCC2nwRS— Raw score – Castles and Coltheart Test 2; number of nonwords read correctlyWASIvRS— Raw score – vocabulary knowledge indexed by the Vocabulary subtest of the WASI-IIBPVSRS— Raw score – vocabulary knowledge indexed by the British Picture Vocabulary Scale – Third EditionSpelling.transcription— Transcription of the spelling response produced by children in the orthographic post-testLevenshtein.Score— Children were asked to spell each word to dictation and spelling productions were transcribed for scoring. Responses were scored using a Levenshtein distance measure indexing the number of letter deletions, insertions and substitutions that distinguish between the target and child’s response. For example, the response ‘epegram’ for target ‘epigram’ attracts a Levenshtein score of 1 (one substitution). Thus, this score gives credit for partially correct responses, as well as entirely correct responses. The maximum score is 0, with higher scores indicating less accurate responses.

(Notice that, for the sake of brevity, I do not list the z_ variables but these are explained in the study OSF repository materials.)

Levenshtein distance scores are higher if a child makes more errors in producing the letters in a spelling response.

- This means that if we want to see what factors help a child to learn a word, including its spelling, then we want to see that helpful factors are associated with lower Levenshtein scores.

To demonstrate some of the processes we can enact to process and visualize data, and some of the benefits of doing so, we are going to work with the concurrent.orth_2020-08-11.csv dataset. These are data corresponding to the @ricketts2021 Study 2. concurrent refers to the analysis (a concurrent comparison) of data from younger and older children.

Read the data into R

Assuming you have downloaded the data files, we first read the dataset into the R environment: concurrent.orth_2020-08-11.csv. We do the data read in a bit differently than you have seen it done before; we will come back to what is going on (in Section 1.7.4.1).

conc.orth <- read_csv("concurrent.orth_2020-08-11.csv",

col_types = cols(

Participant = col_factor(),

Time = col_factor(),

Study = col_factor(),

Instructions = col_factor(),

Version = col_factor(),

Word = col_factor(),

Orthography = col_factor(),

Measure = col_factor(),

Spelling.transcription = col_factor()

))We can inspect these data using summary().

summary(conc.orth) Participant Time Study Instructions Version

EOF001 : 16 1:1167 Study1&2:655 explicit :592 a:543

EOF002 : 16 Study2 :512 incidental:575 b:624

EOF004 : 16

EOF006 : 16

EOF007 : 16

EOF008 : 16

(Other):1071

Word Consistency_H Orthography Measure

Accolade : 73 Min. :0.9048 absent :583 Orth_sp:1167

Cataclysm : 73 1st Qu.:1.5043 present:584

Contrition: 73 Median :1.9142

Debacle : 73 Mean :2.3253

Dormancy : 73 3rd Qu.:3.0436

Epigram : 73 Max. :3.9681

(Other) :729

Score WASImRS TOWREsweRS TOWREpdeRS CC2regRS

Min. :0.0000 Min. : 5 Min. :51.00 Min. :19.00 Min. :28.00

1st Qu.:0.0000 1st Qu.:13 1st Qu.:69.00 1st Qu.:35.00 1st Qu.:36.00

Median :0.0000 Median :17 Median :74.00 Median :41.00 Median :38.00

Mean :0.2913 Mean :16 Mean :74.23 Mean :41.59 Mean :36.91

3rd Qu.:1.0000 3rd Qu.:19 3rd Qu.:80.00 3rd Qu.:50.00 3rd Qu.:39.00

Max. :1.0000 Max. :25 Max. :93.00 Max. :59.00 Max. :40.00

CC2irregRS CC2nwRS WASIvRS BPVSRS

Min. :17.00 Min. :13.00 Min. :16.00 Min. :103.0

1st Qu.:23.00 1st Qu.:29.00 1st Qu.:25.00 1st Qu.:119.0

Median :25.00 Median :33.00 Median :29.00 Median :133.0

Mean :25.24 Mean :32.01 Mean :29.12 Mean :130.9

3rd Qu.:27.00 3rd Qu.:37.00 3rd Qu.:33.00 3rd Qu.:142.0

Max. :35.00 Max. :40.00 Max. :39.00 Max. :158.0

Spelling.transcription Levenshtein.Score zTOWREsweRS zTOWREpdeRS

Epigram : 57 Min. :0.000 Min. :-2.67807 Min. :-2.33900

Platitude : 43 1st Qu.:0.000 1st Qu.:-0.60283 1st Qu.:-0.68243

Contrition: 42 Median :1.000 Median :-0.02638 Median :-0.06122

fracar : 39 Mean :1.374 Mean : 0.00000 Mean : 0.00000

Nonentity : 39 3rd Qu.:2.000 3rd Qu.: 0.66537 3rd Qu.: 0.87061

raconter : 35 Max. :7.000 Max. : 2.16415 Max. : 1.80243

(Other) :912

zCC2regRS zCC2irregRS zCC2nwRS zWASIvRS

Min. :-3.3636 Min. :-2.22727 Min. :-3.1053 Min. :-2.63031

1st Qu.:-0.3435 1st Qu.:-0.60461 1st Qu.:-0.4920 1st Qu.:-0.82633

Median : 0.4115 Median :-0.06373 Median : 0.1614 Median :-0.02456

Mean : 0.0000 Mean : 0.00000 Mean : 0.0000 Mean : 0.00000

3rd Qu.: 0.7890 3rd Qu.: 0.47716 3rd Qu.: 0.8147 3rd Qu.: 0.77721

Max. : 1.1665 Max. : 2.64070 Max. : 1.3047 Max. : 1.97986

zBPVSRS mean_z_vocab mean_z_read zConsistency_H

Min. :-1.9946 Min. :-2.06910 Min. :-2.39045 Min. :-1.4153

1st Qu.:-0.8495 1st Qu.:-0.85941 1st Qu.:-0.43321 1st Qu.:-0.8181

Median : 0.1525 Median :-0.01483 Median : 0.08829 Median :-0.4096

Mean : 0.0000 Mean : 0.00000 Mean : 0.00000 Mean : 0.0000

3rd Qu.: 0.7967 3rd Qu.: 0.72964 3rd Qu.: 0.68438 3rd Qu.: 0.7157

Max. : 1.9418 Max. : 1.96083 Max. : 1.52690 Max. : 1.6368

You should notice one key bit of information in the summary. Focus on the summary for what is in the Participant column. You can see that we have a number of participants in this dataset, listed by Participant identity code in the summary() view e.g. EOF001. For each participant, we have 16 rows of data.

When we ask R for a summary of a nominal variable or factor it will show us the levels of each factor (i.e., each category or class of objects encoded by the categorical variable), and a count for the number of observations for each level.

Take a look at the rows of data for EOF001.

| Participant | Time | Study | Instructions | Version | Word | Consistency_H | Orthography | Measure | Score | WASImRS | TOWREsweRS | TOWREpdeRS | CC2regRS | CC2irregRS | CC2nwRS | WASIvRS | BPVSRS | Spelling.transcription | Levenshtein.Score | zTOWREsweRS | zTOWREpdeRS | zCC2regRS | zCC2irregRS | zCC2nwRS | zWASIvRS | zBPVSRS | mean_z_vocab | mean_z_read | zConsistency_H |

|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|

| EOF001 | 1 | Study1&2 | explicit | a | Accolade | 1.9142393 | absent | Orth_sp | 0 | 15 | 62 | 33 | 39 | 27 | 30 | 26 | 126 | acalade | 2 | -1.409869 | -0.8895032 | 0.7889916 | 0.4771563 | -0.3286222 | -0.6258886 | -0.3484719 | -0.4871803 | -0.2723693 | -0.4095955 |

| EOF001 | 1 | Study1&2 | explicit | a | Cataclysm | 3.5060075 | present | Orth_sp | 1 | 15 | 62 | 33 | 39 | 27 | 30 | 26 | 126 | Cataclysm | 0 | -1.409869 | -0.8895032 | 0.7889916 | 0.4771563 | -0.3286222 | -0.6258886 | -0.3484719 | -0.4871803 | -0.2723693 | 1.1763372 |

| EOF001 | 1 | Study1&2 | explicit | a | Contrition | 1.7486898 | absent | Orth_sp | 1 | 15 | 62 | 33 | 39 | 27 | 30 | 26 | 126 | Contrition | 0 | -1.409869 | -0.8895032 | 0.7889916 | 0.4771563 | -0.3286222 | -0.6258886 | -0.3484719 | -0.4871803 | -0.2723693 | -0.5745381 |

| EOF001 | 1 | Study1&2 | explicit | a | Debacle | 2.9008386 | present | Orth_sp | 0 | 15 | 62 | 33 | 39 | 27 | 30 | 26 | 126 | dibarcle | 2 | -1.409869 | -0.8895032 | 0.7889916 | 0.4771563 | -0.3286222 | -0.6258886 | -0.3484719 | -0.4871803 | -0.2723693 | 0.5733869 |

| EOF001 | 1 | Study1&2 | explicit | a | Dormancy | 1.6263089 | absent | Orth_sp | 0 | 15 | 62 | 33 | 39 | 27 | 30 | 26 | 126 | doormensy | 3 | -1.409869 | -0.8895032 | 0.7889916 | 0.4771563 | -0.3286222 | -0.6258886 | -0.3484719 | -0.4871803 | -0.2723693 | -0.6964704 |

| EOF001 | 1 | Study1&2 | explicit | a | Epigram | 1.3822337 | present | Orth_sp | 1 | 15 | 62 | 33 | 39 | 27 | 30 | 26 | 126 | Epigram | 0 | -1.409869 | -0.8895032 | 0.7889916 | 0.4771563 | -0.3286222 | -0.6258886 | -0.3484719 | -0.4871803 | -0.2723693 | -0.9396508 |

| EOF001 | 1 | Study1&2 | explicit | a | Foible | 2.7051987 | present | Orth_sp | 1 | 15 | 62 | 33 | 39 | 27 | 30 | 26 | 126 | Foible | 0 | -1.409869 | -0.8895032 | 0.7889916 | 0.4771563 | -0.3286222 | -0.6258886 | -0.3484719 | -0.4871803 | -0.2723693 | 0.3784641 |

| EOF001 | 1 | Study1&2 | explicit | a | Fracas | 3.1443345 | absent | Orth_sp | 0 | 15 | 62 | 33 | 39 | 27 | 30 | 26 | 126 | fracar | 1 | -1.409869 | -0.8895032 | 0.7889916 | 0.4771563 | -0.3286222 | -0.6258886 | -0.3484719 | -0.4871803 | -0.2723693 | 0.8159901 |

| EOF001 | 1 | Study1&2 | explicit | a | Lassitude | 0.9048202 | present | Orth_sp | 0 | 15 | 62 | 33 | 39 | 27 | 30 | 26 | 126 | lacitude | 2 | -1.409869 | -0.8895032 | 0.7889916 | 0.4771563 | -0.3286222 | -0.6258886 | -0.3484719 | -0.4871803 | -0.2723693 | -1.4153141 |

| EOF001 | 1 | Study1&2 | explicit | a | Luminary | 1.0985931 | absent | Orth_sp | 0 | 15 | 62 | 33 | 39 | 27 | 30 | 26 | 126 | loomenery | 4 | -1.409869 | -0.8895032 | 0.7889916 | 0.4771563 | -0.3286222 | -0.6258886 | -0.3484719 | -0.4871803 | -0.2723693 | -1.2222516 |

| EOF001 | 1 | Study1&2 | explicit | a | Nonentity | 3.9681391 | absent | Orth_sp | 0 | 15 | 62 | 33 | 39 | 27 | 30 | 26 | 126 | nonenterty | 2 | -1.409869 | -0.8895032 | 0.7889916 | 0.4771563 | -0.3286222 | -0.6258886 | -0.3484719 | -0.4871803 | -0.2723693 | 1.6367746 |

| EOF001 | 1 | Study1&2 | explicit | a | Platitude | 0.9048202 | present | Orth_sp | 1 | 15 | 62 | 33 | 39 | 27 | 30 | 26 | 126 | Platitude | 0 | -1.409869 | -0.8895032 | 0.7889916 | 0.4771563 | -0.3286222 | -0.6258886 | -0.3484719 | -0.4871803 | -0.2723693 | -1.4153141 |

| EOF001 | 1 | Study1&2 | explicit | a | Propensity | 1.6861898 | absent | Orth_sp | 0 | 15 | 62 | 33 | 39 | 27 | 30 | 26 | 126 | propencity | 1 | -1.409869 | -0.8895032 | 0.7889916 | 0.4771563 | -0.3286222 | -0.6258886 | -0.3484719 | -0.4871803 | -0.2723693 | -0.6368090 |

| EOF001 | 1 | Study1&2 | explicit | a | Raconteur | 3.8245334 | absent | Orth_sp | 0 | 15 | 62 | 33 | 39 | 27 | 30 | 26 | 126 | raconter | 1 | -1.409869 | -0.8895032 | 0.7889916 | 0.4771563 | -0.3286222 | -0.6258886 | -0.3484719 | -0.4871803 | -0.2723693 | 1.4936954 |

| EOF001 | 1 | Study1&2 | explicit | a | Syncopation | 3.0436450 | present | Orth_sp | 0 | 15 | 62 | 33 | 39 | 27 | 30 | 26 | 126 | sincipation | 2 | -1.409869 | -0.8895032 | 0.7889916 | 0.4771563 | -0.3286222 | -0.6258886 | -0.3484719 | -0.4871803 | -0.2723693 | 0.7156697 |

| EOF001 | 1 | Study1&2 | explicit | a | Veracity | 2.8693837 | present | Orth_sp | 0 | 15 | 62 | 33 | 39 | 27 | 30 | 26 | 126 | varacity | 1 | -1.409869 | -0.8895032 | 0.7889916 | 0.4771563 | -0.3286222 | -0.6258886 | -0.3484719 | -0.4871803 | -0.2723693 | 0.5420473 |

You can see that for EOF001, as for every participant, we have information on the conditions under which we observed their responses (Instructions, Orthography), as well as information about the stimuli that we asked participants to respond to (e.g., Word, Consistency_H), information about the responses or outcomes we recorded (Measure, Score, Spelling.transcription, Levenshtein.Score), and information about the participants themselves (e.g., TOWREsweRS, TOWREpdeRS).

Process the data

We almost always need to process data in order to render the information ready for discovery or communication data visualization.

Specify column data types

You will have seen that data processing began when we first read the data in for use. Let’s go back and take a look at the code steps.

conc.orth <- read_csv("concurrent.orth_2020-08-11.csv",

col_types = cols(

Participant = col_factor(),

Time = col_factor(),

Study = col_factor(),

Instructions = col_factor(),

Version = col_factor(),

Word = col_factor(),

Orthography = col_factor(),

Measure = col_factor(),

Spelling.transcription = col_factor()

)

)The chunk of code is doing two things: first, we tell R what .csv file we want to read into the environment, and what we want to call the dataset; and then we tell R how we want to classify the data variable columns.

conc.orth <- read_csv("concurrent.orth_2020-08-11.csv"first reads the named.csvfile, creating an object I will callconc.orth: a dataset or tibble we can now work with in R.

- You have been using the

read.csv()function to read in data files. - The

read_csv()function is the more moderntidyverseform of the function you were introduced to. - Both versions work in similar ways but

read_csv()is a bit more efficient, and it allows us to do what we do next.

col_types = cols( ... )tells R how to interpret some of the columns in the .csv.

- The

read_csv()function is excellent at working out what types of data are held in each column but sometimes we have to tell it what to do. - Here, I am specifying with e.g.

Participant = col_factor()that theParticipantcolumn should be treated as a categorical or nominal variable, a factor.

Using the col_types = cols( ... ) argument saves me from having to first read the data in then using code like the following to require, technically, coerce R into recognizing the nominal nature of variables like Participant with code like

conc.orth$Participant <- as.factor(conc.orth$Participant)Exercise

I do not have to do step 2 of the read-in process, here. What happens if we use just read_csv()? Try it.

conc.orth <- read_csv("concurrent.orth_2020-08-11.csv")Further information

You can read more about read_csv() here

You can read more about col_types = cols() here

Extract information from the dataset

The @ricketts2021 dataset orth.conc is a moderately sized and rich dataset with several observations, on multiple variables, for each of many participants. Sometimes, we want to extract information from a more complex dataset because we want to understand or present a part of it, or a relatively simple account of it. We look at an example of how you might do that now.

As you saw when you looked at the summary of the orth.conc dataset, we have multiple rows of data for each participant. Recall the design of the study. For each participant, we recorded their response to a stimulus word, in a test of word learning, for 16 words.

For each participant, we have a separate row for each response the participant made to each word. But you will have noticed that information about the participant is repeated. So, for participant EOF001, we have data about their performance e.g. on the BPVSRS vocabulary test (they scored 126). Notice that that score is repeated: the same value is copied for each row, for this participant, in the BPVSRS column. The reason the data are structured like this are not relevant here 1 but it does require us to do some data processing, as I explain next.

It is a very common task to want to present a summary of the attributes of your participants or stimuli when you are reporting data in a report of a psychological research project. We could get a summary of the participant attributes using the psych library describe function as follows.

conc.orth %>%

select(WASImRS:BPVSRS) %>%

describe(ranges = FALSE, skew = FALSE) vars n mean sd se

WASImRS 1 1167 16.00 4.30 0.13

TOWREsweRS 2 1167 74.23 8.67 0.25

TOWREpdeRS 3 1167 41.59 9.66 0.28

CC2regRS 4 1167 36.91 2.65 0.08

CC2irregRS 5 1167 25.24 3.70 0.11

CC2nwRS 6 1167 32.01 6.12 0.18

WASIvRS 7 1167 29.12 4.99 0.15

BPVSRS 8 1167 130.87 13.97 0.41But you can see that part of the information in the summary does not appear to make sense at first glance. We do not have 1167 participants in this dataset, as @ricketts2021 report.

How do we extract the participant attribute variable data for each unique participant code for the participants in our dataset?

We create a new dataset conc.orth.subjs by taking conc.orth and piping it through a series of processing steps. As part of the process, we want to extract the data for each unique unique Participant identity code using distinct(). Along the way, we want to calculate the mean accuracy of response on the outcome measure (Score), that is, the average number of edits separating a child’s spelling of a target word from the correct spelling.

This is how we do it.

conc.orth.subjs <- ...tells R to create a new datasetconc.orth.subjs.conc.orth %>% ...we do this by telling R to takeconc.orthand pipe it through the following steps.group_by(Participant) %>%first we group the data byParticipantidentity code.mutate(mean.score = mean(Score)) %>%then we usemutate()to create the new variablemean.scoreby calculating themean()of theScorevariable values (i.e. the average score) for each participant. We then pipe to the next step.ungroup() %>%we tell R to ungroup the data because we want to work with all rows for what comes next, and we then pipe to the next step.distinct(Participant, .keep_all = TRUE) %>%requires R to extract from the fullorth.concdataset the set of (here, 16) data rows we have for each distinct (uniquely identified)Participant. We use the argument.keep_all = TRUEto tell R that we want to keep all columns. This requires the next step, so we tell R to pipe%>%the data.select(WASImRS:BPVSRS, mean.score, Participant)then tells R to select just the columns with information about participant attributes.(WASImRS:BPVSRStells R to select every column betweenWASImRSandBPVSRSinclusive.mean.score, Participanttells R we also want those columns, specified by name, including themean.scorecolumn of average response scores we calculated just earlier.

We can now get a sensible summary of the descriptive statistics for the participants in Study 2 of the @ricketts2021 investigation.

conc.orth.subjs %>%

select(-Participant) %>%

describe(ranges = FALSE, skew = FALSE) vars n mean sd se

WASImRS 1 73 16.00 4.33 0.51

TOWREsweRS 2 73 74.22 8.73 1.02

TOWREpdeRS 3 73 41.58 9.73 1.14

CC2regRS 4 73 36.90 2.67 0.31

CC2irregRS 5 73 25.23 3.72 0.44

CC2nwRS 6 73 32.00 6.17 0.72

WASIvRS 7 73 29.12 5.02 0.59

BPVSRS 8 73 130.88 14.06 1.65

mean.score 9 73 1.38 0.62 0.07This is exactly the kind of tabled summary of descriptive statistics we would expect to produce in a report, in a presentation of the participant characteristics for a study sample (in e.g., the Methods section).

Notice:

- The table has not yet been formatted according to APA rules.

- We would prefer to use real words for row name labels instead of dataset variable column labels, e.g, replace

TOWREsweRSwith: “TOWRE word reading score”.

Exercise

In these bits of demonstration code, we extract information relating just to participants. However, in this study, we recorded the responses participants made to 16 stimulus words, and we include in the dataset information about the word properties Consistency_H.

- Can you adapt the code you see here in order to calculate a mean score for each word, and then extract the word-level information for each distinct stimulus word identity?

Further information

You can read more about the psych library, which is often useful, here. You can read more about the distinct() function here.

Visualize the data: introduction

It has taken us a while but now we are ready to examine the data using visualizations. Remember, we are engaging in visualization to (1.) do discovery, to get a sense of our data, and maybe reveal unexpected aspects, and (2.) potentially to communicate to ourselves and others what we have observed or perhaps what insights we can gain.

We have been learning to use histograms, in other classes, so let’s start there.

Examine the distributions of numeric variables

We can use histograms to visualize the distribution of observed values for a numeric variable. Let’s start simple, and then explore how to elaborate the plotting code, in a series of edits, to polish the plot presentation.

This is how the code works.

ggplot(data = conc.orth.subjs, ...tells R what function to useggplot()and what data to work withdata = conc.orth.subjs.aes(x = WASImRS)tells R what aesthetic mapping to use: we want to map values on theWASImRSvariable (small to large) to locations on the x-axis (left to right).geom_histogram()tells R to construct a histogram, presenting a statistical summary of the distribution of intelligence scores.

With histograms, we are visualizing the distribution of a single continuous variable by dividing the variable values into bins (i.e. subsets) and counting the number of observations in each bin. Histograms display the counts with bars.

You can see more information about geom_histogram here.

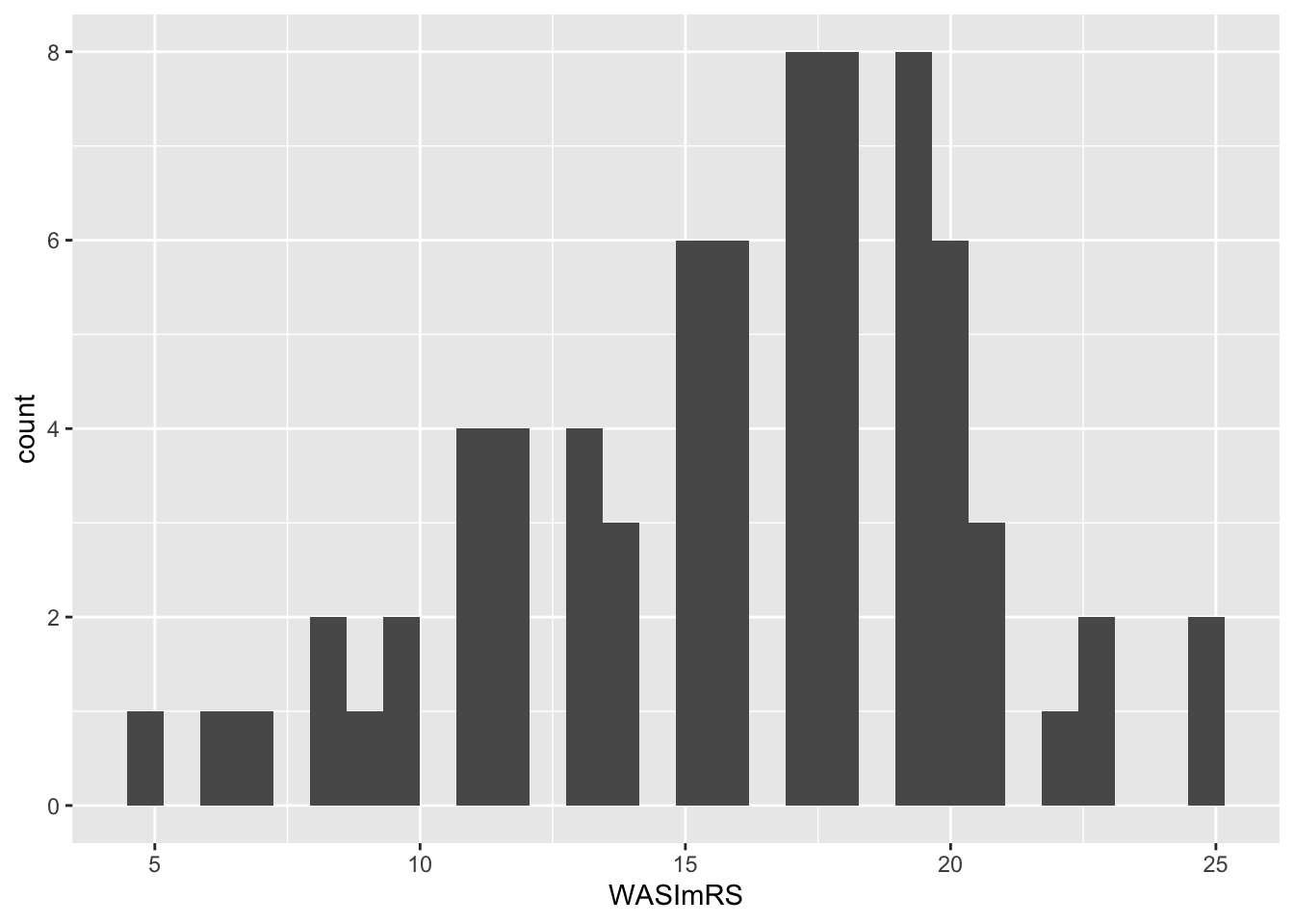

Figure 5 shows how intelligence (WASImRS) scores vary in the Ricketts Study 2 dataset. Scores peak around 17, with a long tail of lower scores towards 5, and a maximum around 25.

Where I use the word “peak” I am talking about the tallest bar in the plot (or, later the highest point in a density curve). At this point, we have the most observations of the value under the bar. Here, we observed the score WASImRS \(= 17\) for the most children in this sample.

A primary function of discovery visualization is to assess whether the distribution of scores on a variable is consistent with expectations, granted assumptions about a sample (e.g., that the children are typically developing). We would normally use research area knowledge to assess whether this distribution fits expectations for a sample of typically developing school-aged children in the UK. However, I shall leave that concern aside, here, so that we can focus on enriching the plot presentation, next.

There are two main problems with the plot:

- The bars are “gappy” in the histogram, suggesting we have not grouped observed values in sufficiently wide subsets (bins). This is a problem because it weakens our ability to gain or communicate a visual sense of the distribution of scores.

- The axis labeling uses the dataset variable name

WASImRSbut if we were to present the plot to others we could not expect them to know what that means.

We can fix both these problems, and polish the plot for presentation, through the following code steps.

ggplot(data = conc.orth.subjs, aes(x = WASImRS)) +

geom_histogram(binwidth = 2) +

labs(x = "Scores on the Wechsler Abbreviated Scale of Intelligence") +

theme_bw()

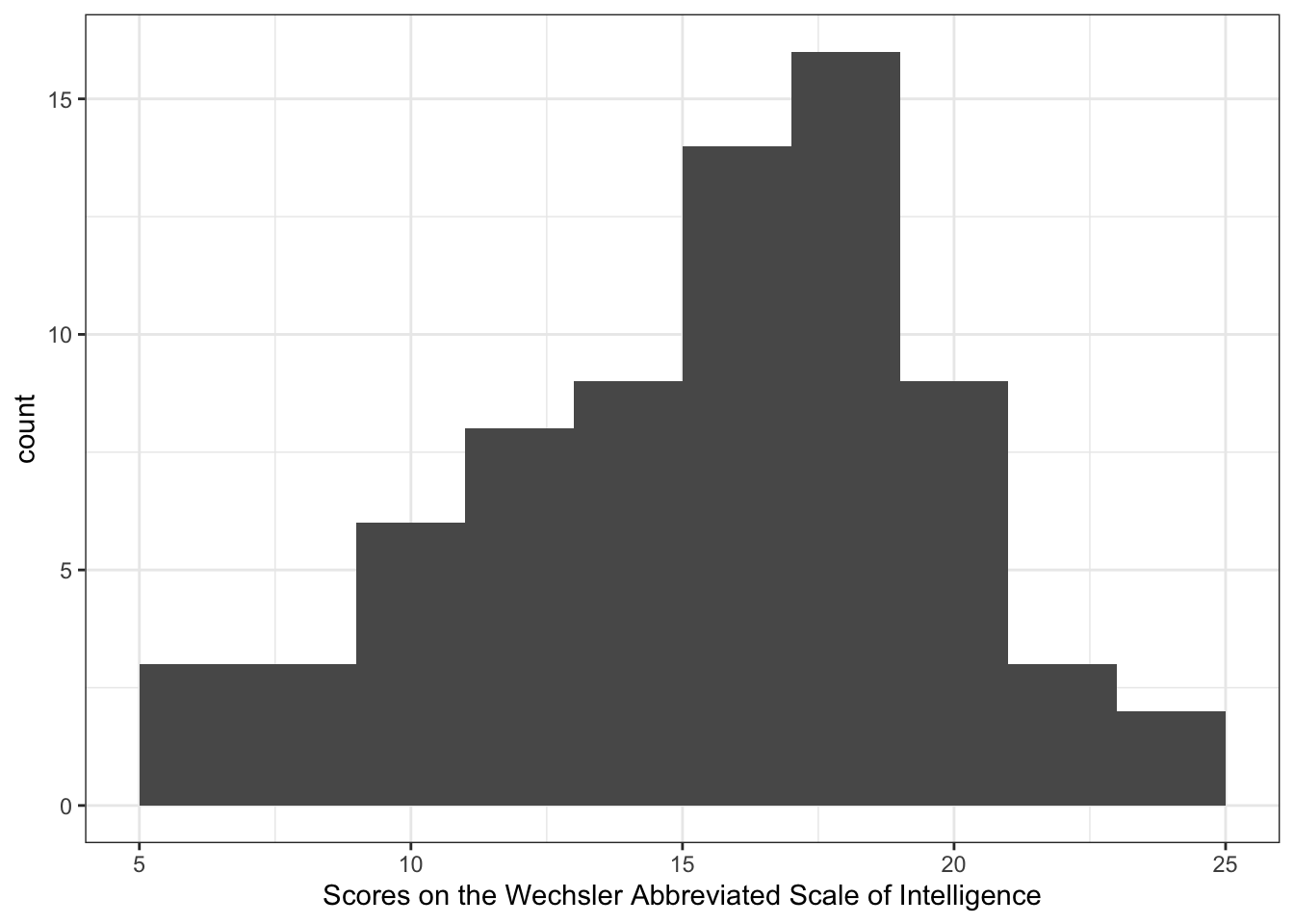

Figure 6 shows the same data, and furnishes us with the same picture of the distribution of intelligence scores but it is a bit easier to read. We achieve this by making three edits.

geom_histogram(binwidth = 2) +we change thebinwidth.

- This is so that more different observed values of the data variable are included in bins (subsets corresponding to bars) so that the bars correspond to information about a wider range of values.

- This makes the bars bigger, wider, and closes the gaps.

- And this means we can focus the eyes of the audience for our plot on the visual impression we wish to communicate: the skewed distribution of intelligence scores.

labs(x = "Scores on the Wechsler Abbreviated Scale of Intelligence") +changes the label to something that should be understandable by people, in our audience, who do not have access to variable information (as we do) about the dataset.theme_bw()we change the overall appearance of the plot by changing the theme.

Exercise

We could, if we wanted, add a line and annotation to indicate the mean value, as you saw in Figure 2.

- Can you add the necessary code to indicate the mean value of WASI scores, for this plot?

We can, of course, plot histograms to indicate the distributions of other variables.

- Can you apply the histogram code to plot histograms of other variables?

Comparing the distributions of numeric variables

We may wish to discover or communicate how values vary on dataset variables in two different ways. Sometimes, we need to examine how values vary on different variables. And sometimes, we need to examine how values vary on the same variable but in different groups of participants (or stimuli) or under different conditions. We look at this next. We begin by looking at how you might compare how values vary on different variables.

Compare how values vary on different variables

It can be useful to compare the distributions of different variables. Why?

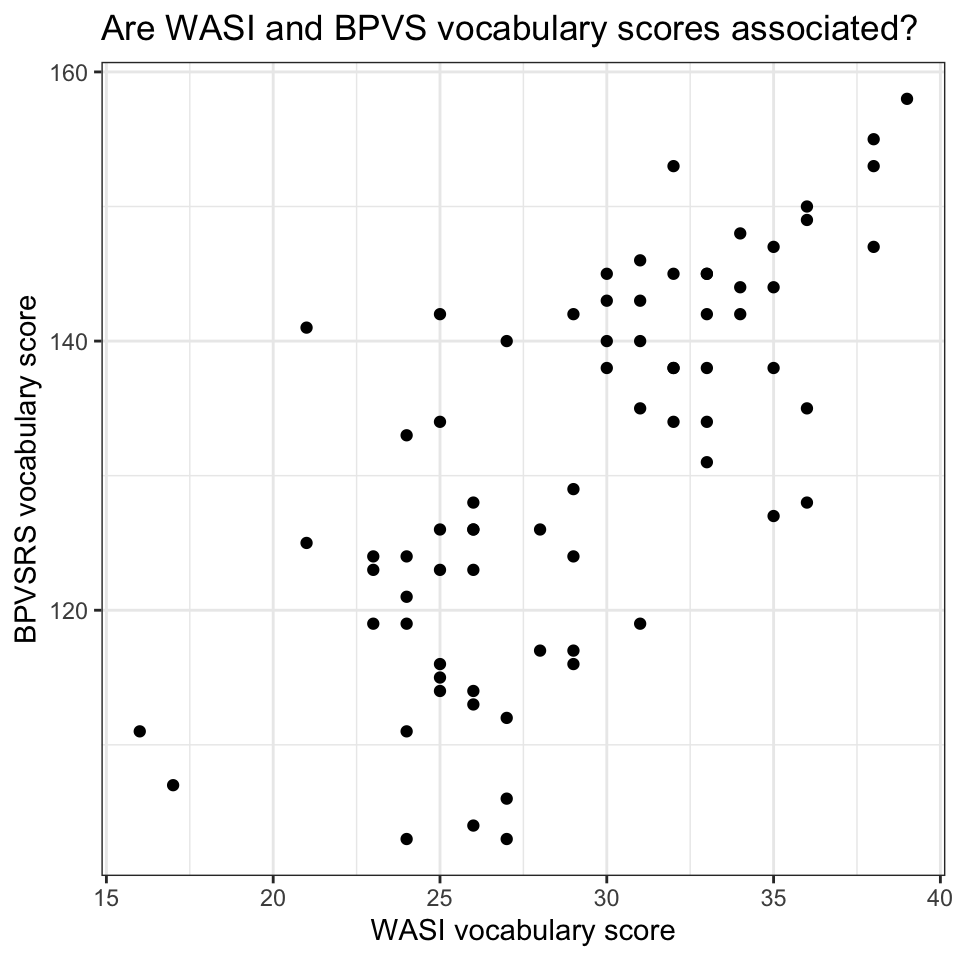

Consider the @ricketts2021 investigation dataset. Like many developmental investigations (see also clinical investigations), we tested children and recorded their scores on a series of standardized measures, here, measures of ability on a range of dimensions. We did this, in part, to establish that the children in our sample are operating at about the level one might expect for typically developing children in cognitive ability dimensions of interest: dimensions like intelligence, reading ability or spelling ability. So, one of the aspects of the data we are considering is whether scores on these dimensions are higher or lower than typical threshold levels. But we also want to examine the distributions of scores because we want to find out:

- if participants are varied in ability (wide distribution) or if maybe they are all similar (narrow distribution) as would be the case if the ability measures are too easy (so all scores are at ceiling) or too hard (so all scores are at floor);

- if there are subgroups within the sample, maybe reflected by two or more peaks;

- if there are unusual scores, maybe reflected by small peaks at very low or very high scores.

We could look at each variable, one plot at a time. Instead, next, I will show you how to produce a set of histogram plots, and present them all as a single grid of plots.

I have to warn you that the way I write the code is not good practice. The code is written with repeats of the ggplot() block of code to produce each plot. This repetition is inefficient and leaves the coding vulnerable to errors because it is hard to spot a mistake in more code. What I should do is encapsulate the code as a function (see here). The reason I do not, here, is because I want to focus our attention on just the plotting.

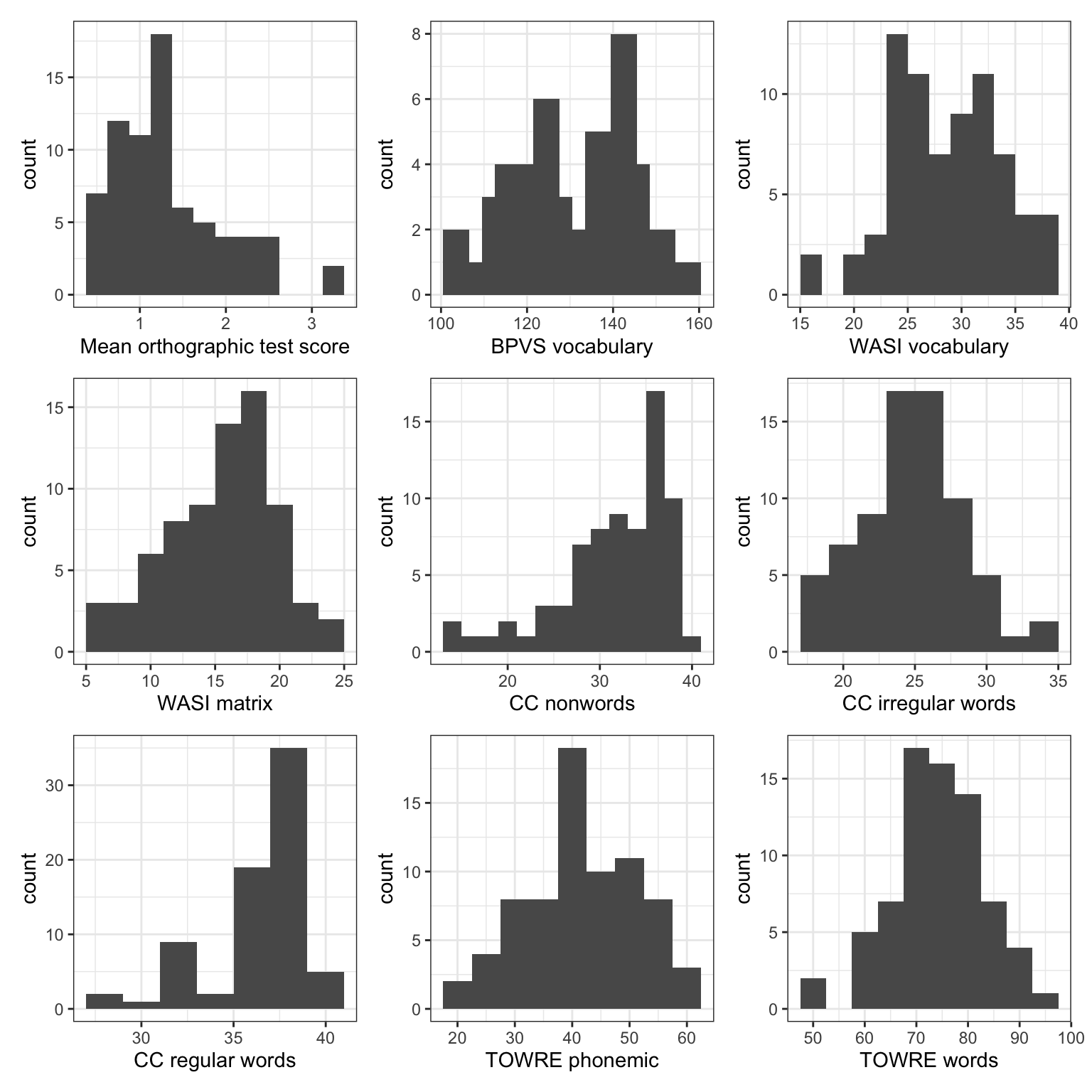

Figure 7 presents a grid of plots showing how scores vary for each ability test measure, for the children in the @ricketts2021 investigation dataset. We need to go through the code steps, next, and discuss what the plots show us (discovery and communication).

p.WASImRS <- ggplot(data = conc.orth.subjs, aes(x = WASImRS)) +

geom_histogram(binwidth = 2) +

labs(x = "WASI matrix") +

theme_bw()

p.TOWREsweRS <- ggplot(data = conc.orth.subjs, aes(x = TOWREsweRS)) +

geom_histogram(binwidth = 5) +

labs(x = "TOWRE words") +

theme_bw()

p.TOWREpdeRS <- ggplot(data = conc.orth.subjs, aes(x = TOWREpdeRS)) +

geom_histogram(binwidth = 5) +

labs(x = "TOWRE phonemic") +

theme_bw()

p.CC2regRS <- ggplot(data = conc.orth.subjs, aes(x = CC2regRS)) +

geom_histogram(binwidth = 2) +

labs(x = "CC regular words") +

theme_bw()

p.CC2irregRS <- ggplot(data = conc.orth.subjs, aes(x = CC2irregRS)) +

geom_histogram(binwidth = 2) +

labs(x = "CC irregular words") +

theme_bw()

p.CC2nwRS <- ggplot(data = conc.orth.subjs, aes(x = CC2nwRS)) +

geom_histogram(binwidth = 2) +

labs(x = "CC nonwords") +

theme_bw()

p.WASIvRS <- ggplot(data = conc.orth.subjs, aes(x = WASIvRS)) +

geom_histogram(binwidth = 2) +

labs(x = "WASI vocabulary") +

theme_bw()

p.BPVSRS <- ggplot(data = conc.orth.subjs, aes(x = BPVSRS)) +

geom_histogram(binwidth = 3) +

labs(x = "BPVS vocabulary") +

theme_bw()

p.mean.score <- ggplot(data = conc.orth.subjs, aes(x = mean.score)) +

geom_histogram(binwidth = .25) +

labs(x = "Mean orthographic test score") +

theme_bw()

p.mean.score + p.BPVSRS + p.WASIvRS + p.WASImRS +

p.CC2nwRS + p.CC2irregRS + p.CC2regRS +

p.TOWREpdeRS + p.TOWREsweRS + plot_layout(ncol = 3)

This is how the code works, step by step:

p.WASImRS <- ggplot(...)first creates a plot object, which we callp.WASImRS.ggplot(data = conc.orth.subjs, aes(x = WASImRS)) +tells R what data to use, and what aesthetic mapping to work with mapping the variableWASImRShere to the x-axis location.geom_histogram(binwidth = 2) +tells R to sort the values ofWASImRSscores into bins and create a histogram to show how many children in the sample present scores of different sizes.labs(x = "WASI matrix") +changes the x-axis label to make it more informative.theme_bw()changes the theme to make it a bit cleaner looking.

We do this bit of code separately for each variable. We change the plot object name, the x = variable specification, and the axis label text for each variable. We adjust the binwidth where it appears to be necessary.

We then use the following plot code to put all the plots together in a single grid.

p.mean.score + p.BPVSRS + p.WASIvRS + p.WASImRS +

p.CC2nwRS + p.CC2irregRS + p.CC2regRS +

p.TOWREpdeRS + p.TOWREsweRS + plot_layout(ncol = 3)- In the code, we add a series of plots together e.g.

p.mean.score + p.BPVSRS + p.WASIvRS ... - and then specify we want a grid of plots with a layout of three columns

plot_layout(ncol = 3).

This syntax requires the library(patchwork) and more information about this very useful library can be found here.

What do the plots show us?

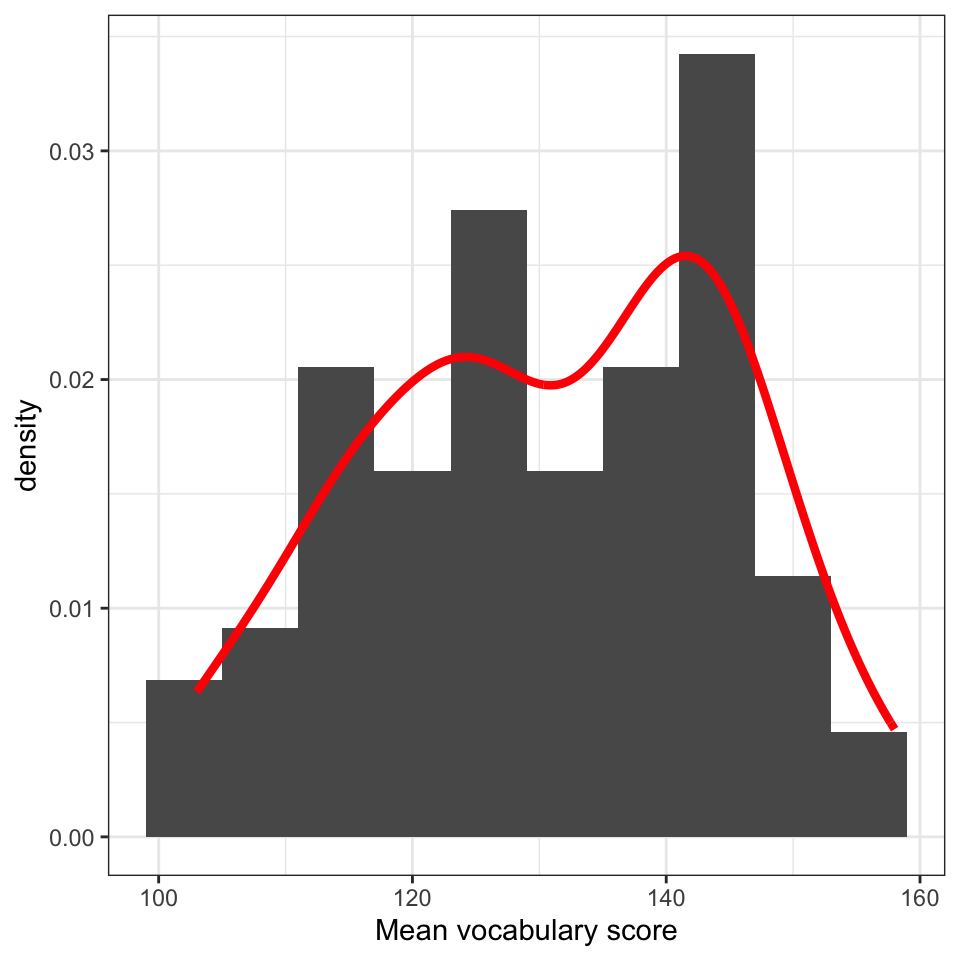

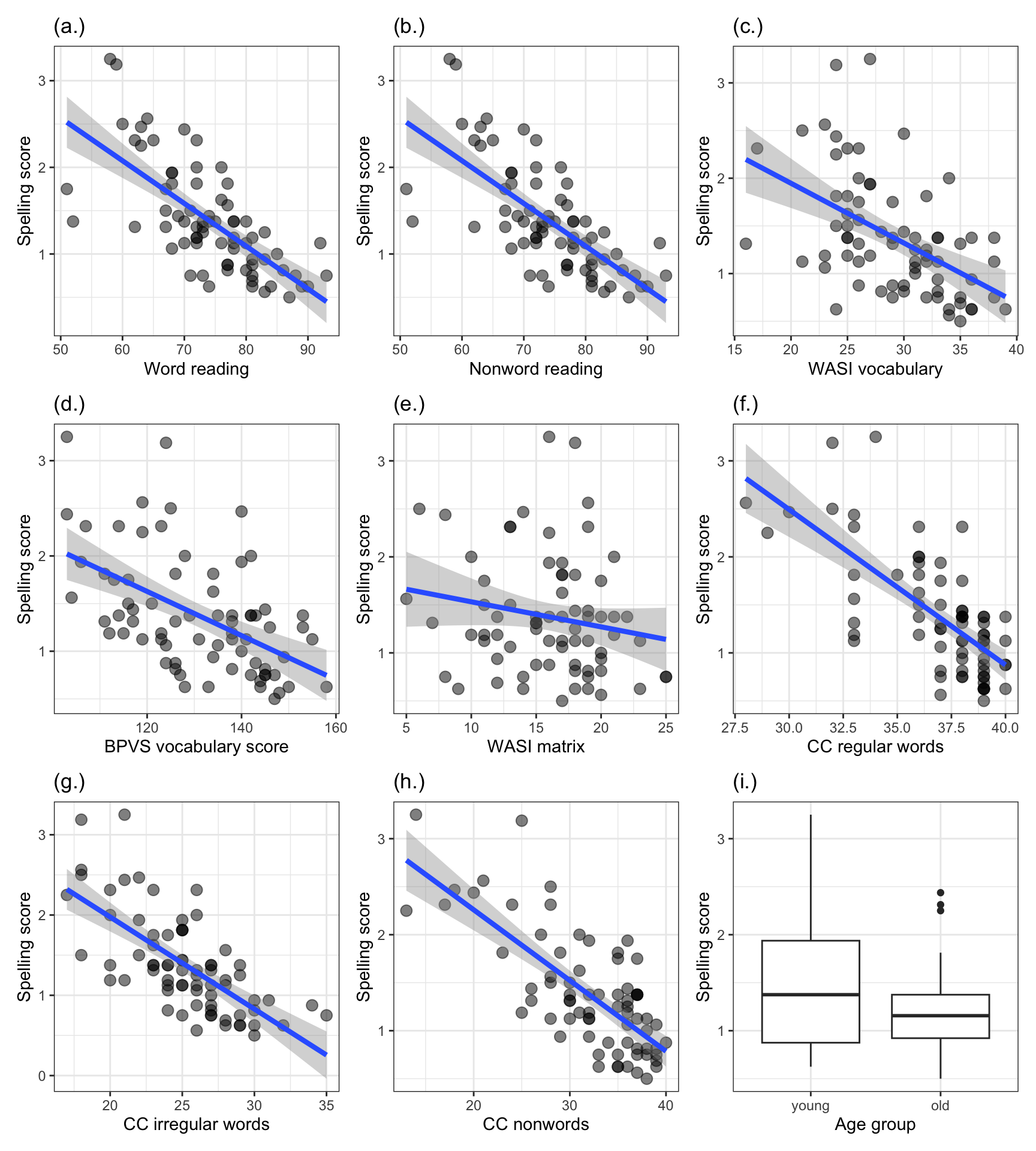

Figure 7 shows a grid of 9 histogram plots. Each plot presents the distribution of scores for the @ricketts2021 Study 2 participant sample on a separate ability measure, including scores on the BPVS vocabulary, WASI vocabulary, TOWRE words and TOWRE nonwords reading tests, as well as scores on the Castles and Coltheart regular words, irregular words and nonwords reading tests, and the mean Levenshtein distance (spelling score) outcome measure of performance for the experimental word learning post-test.

Take a look, you may notice the following features.

- The mean orthographic test score suggests that many children produced spellings to the words they learned in the @ricketts2021 study that, on average, were correct (0 edits) or were one or two edits (e.g., a letter deletion or replacement) away from the target word spelling. The children were learning the words, and most of the time, they learned the spellings of the words effectively. However, one or two children tended to produce spellings that were 2-3 edits distant from the target spelling.

- We can see these features because we can see that the histogram peaks around 1 (at Levenshtein distance score \(= 1\)) but that there is a small bar of scores at around 3.

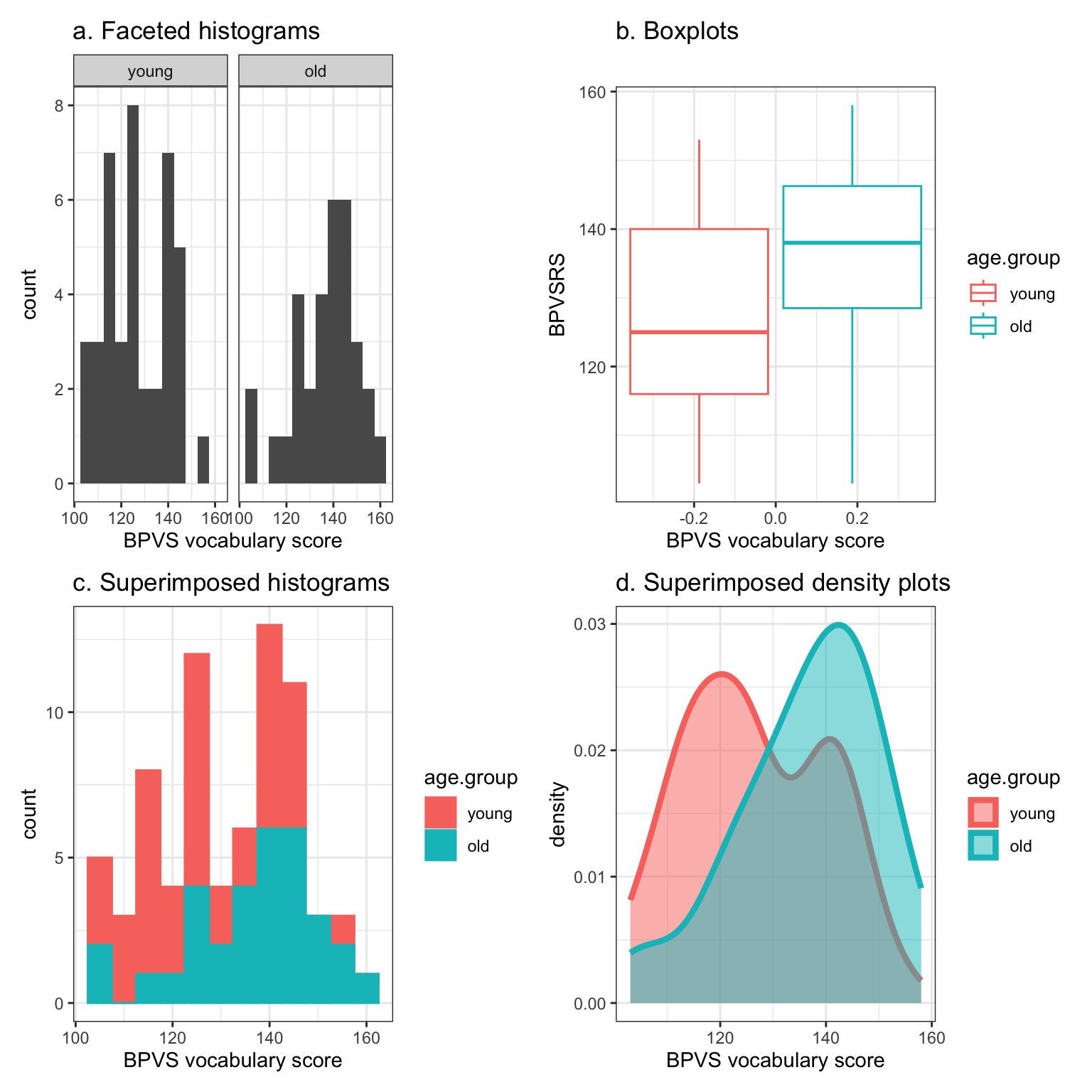

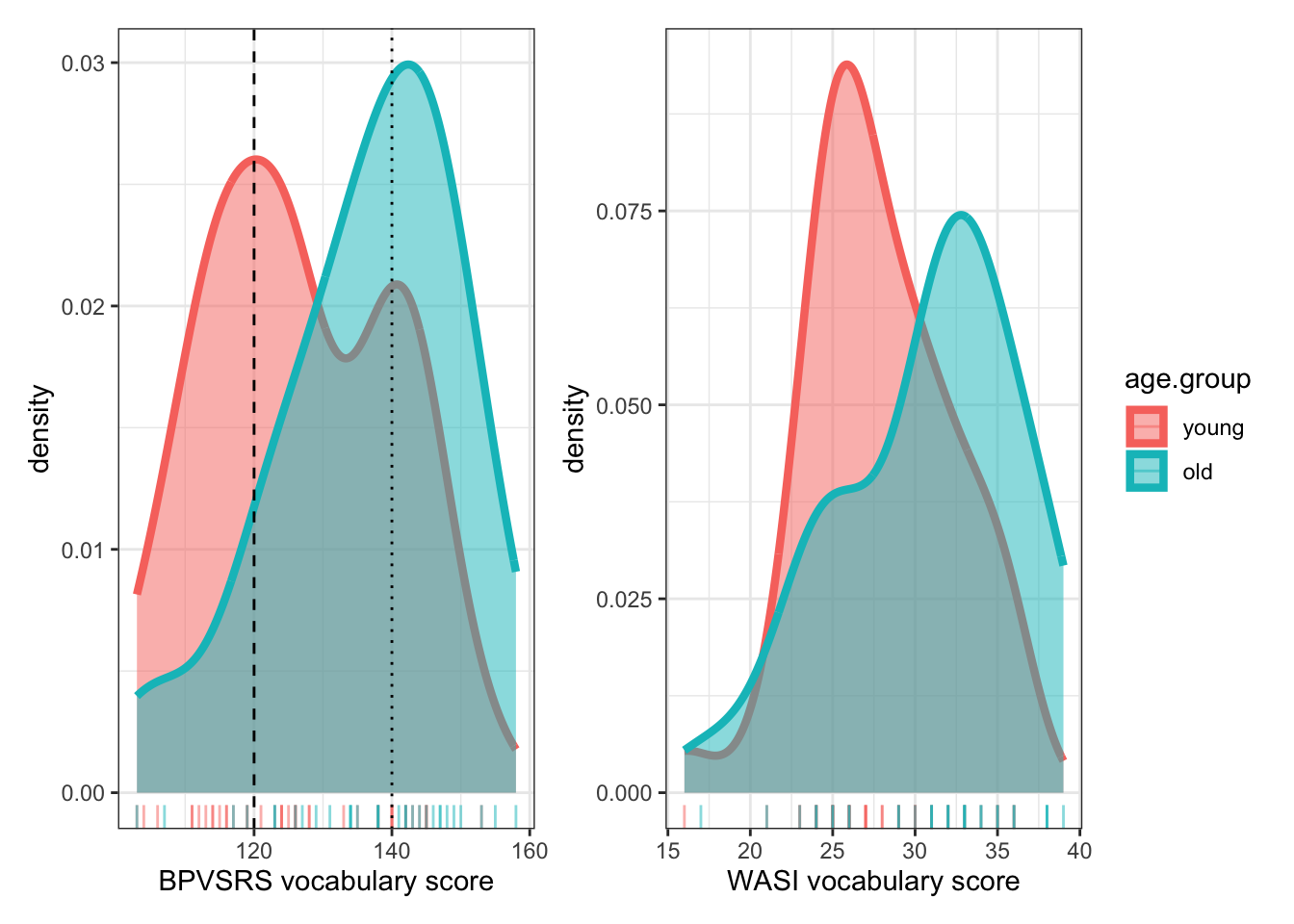

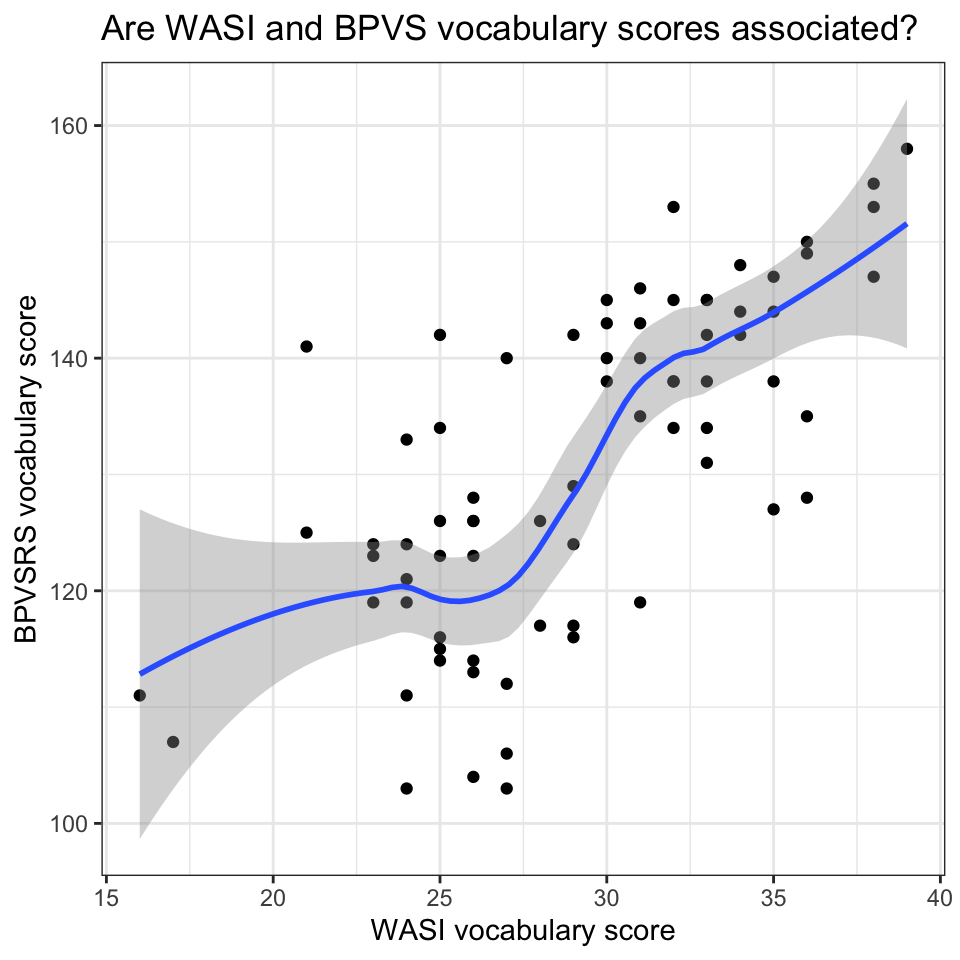

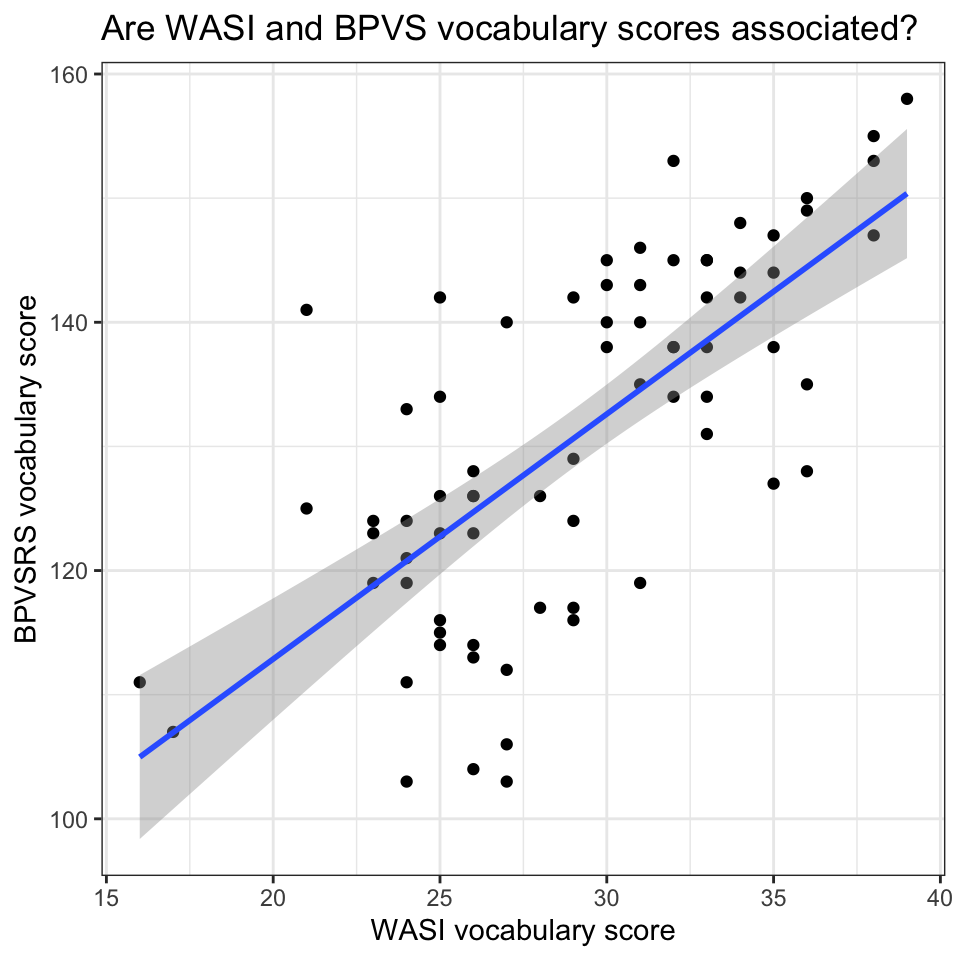

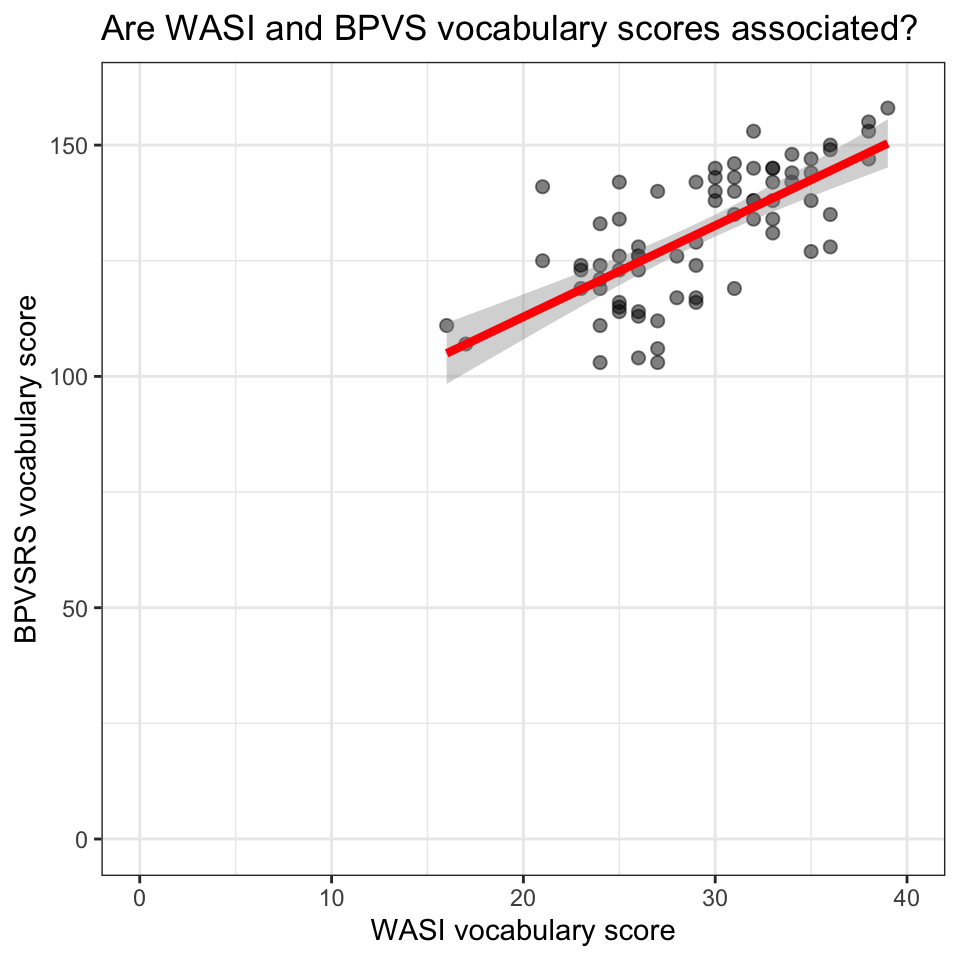

- We can see that there are two peaks on the BPVS and WASI measures of vocabulary. What is going on there?

- Is it the case that we have two sub-groups of children within the overall sample? For example, on the BPVS test, maybe one sub-group of children has a distribution of vocabulary scores with a peak around 120 (the peak shows where most children have scores) while another sub-group of children has a distribution of vocabulary scores with a peak around 140.

- If we look at the CC nonwords and CC regular words tests of reading ability, we may notice that while most children present relatively high scores on these tests (CC nonwords peak around 35, CC regular words peak around 37) there is a skewed distribution. Many of the children’s scores are piled up towards the maximum value in the data on the measures. But we can also see that, on both measures, there are long tails in the distributions because relatively small numbers of children have substantially lower scores.

- Developmental samples are often highly varied (just like clinical samples). Are all the children in the sample at the same developmental stage, or are they all typically developing?

Notice that in presenting a grid of plots like this, we offer a compact visual way to present the same summary information we might otherwise present using a table of descriptive statistics. In some ways, this grid of plots is more informative than the descriptive statistics because the mean and SD values do not tell you what you can see:

- the characteristics of the variation in values, like the presence of two peaks;

- or the presence of unusually high or low scores (for this sample).

Grids of plots like this can be helpful to inspect the distributions of variables in a concise approach. They are not really too useful for comparing the distributions because they require your eyes to move between plots, repeatedly, to do the comparison.

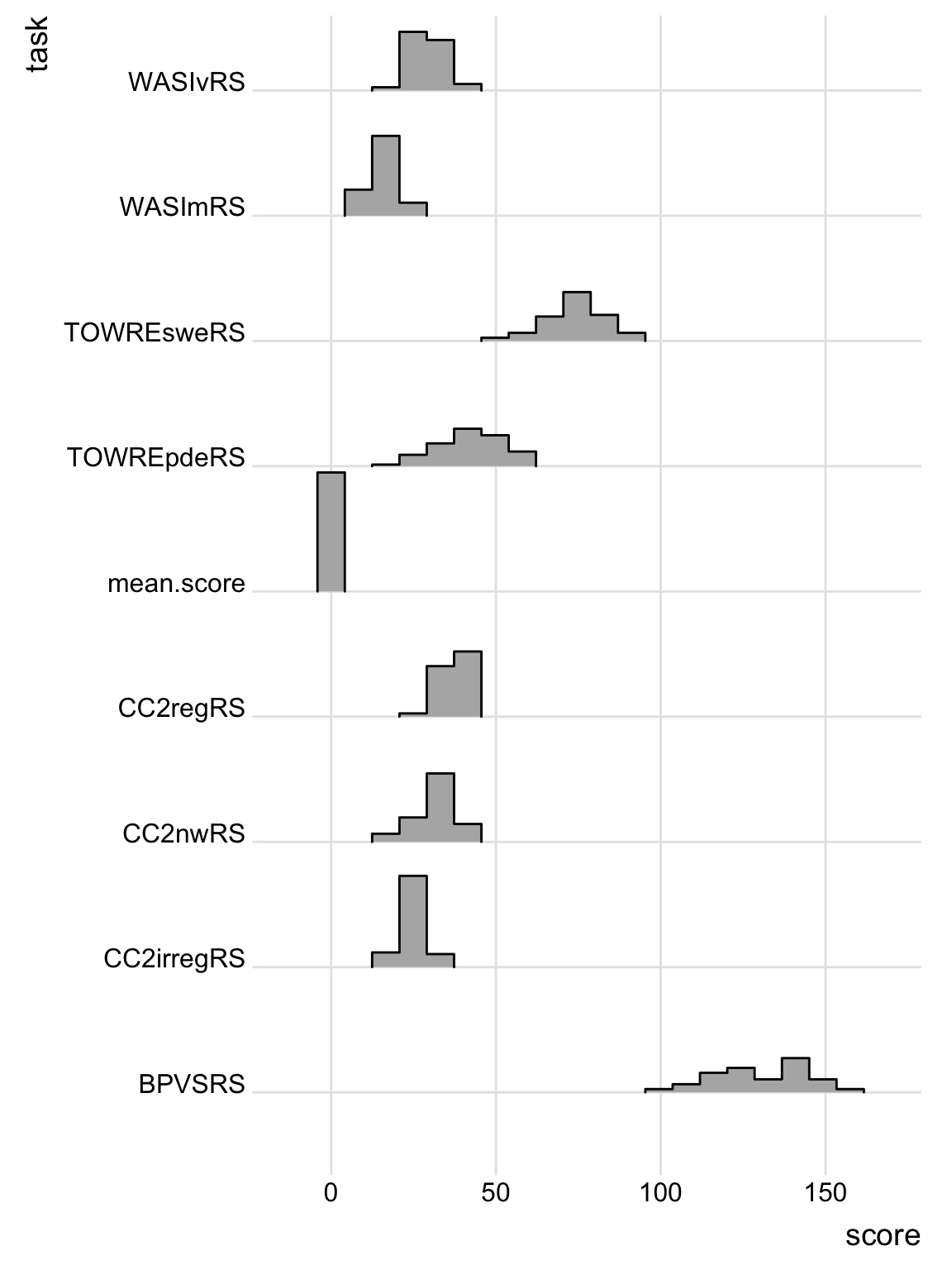

Here is a more compact way to code the grid of histograms using the library(ggridges) function geom_density_ridges(). I do not discuss it in detail because I want to focus your attention on core tidyverse functions (I show you more information in the Notes tab).

Notice that if you produce all the plots so that the are in line in the same column with a shared x-axis it becomes much easier to compare the distributions of scores. You lose some of the fine detail, discussed in relation to Figure 7, but this style allows you to gain an impression, quickly, of how for distributions of scores compare between measures. For example, we can see that within the Castles and Coltheart (CC) measures of reading ability, children do better on regular words than on nonwords, and on nonwords better than on irregular words.

library(ggridges)

conc.orth.subjs %>%

pivot_longer(names_to = "task", values_to = "score", cols = WASImRS:mean.score) %>%

ggplot(aes(y = task, x = score)) +

geom_density_ridges(stat = "binline", bins = 20, scale = 0.95, draw_baseline = FALSE) +

theme_ridges()

library(ggridges)get the library we need.conc.orth.subjs %>%pipe the dataset for processing.pivot_longer(names_to = "task", values_to = "score", cols = WASImRS:mean.score) %>%pivot the data so all test scores are in the same column, “scores” wwith coding for “task” name, and pipe to the next step for plotting.ggplot(aes(y = task, x = score)) +create a plot for the scores on each task.geom_density_ridges(stat = "binline", bins = 20, scale = 0.95, draw_baseline = FALSE) +show the plots as histograms.theme_ridges()change the theme to the specific theme suitable for showing a grid of ridges.

You can find more information on ggridges here.

Compare between groups how values vary on different variables

We will often want to compare the distributions of variable values between groups or between conditions. This need may appear when, for example, we are conducting a between-groups manipulation of some condition and we want to check that the groups are approximately matched on dimensions that are potentially linked to outcomes (i.e., on potential confounds). The need may appear when, alternatively, we have recruited or selected participant (or stimulus) samples and we want to check that the sample sub-groups are approximately matched or detectably different on one or more dimensions of interest or of concern.

As a demonstration of the visualization work we can do in such contexts, let’s pick up on an observation we made earlier, that there are two peaks on the BPVS and WASI measures of vocabulary. I asked: Is it the case that we have two sub-groups of children within the overall sample? Actually, we know the answer to that question because @ricketts2021 state that they recruited one set of children for their Study 1 and then, for Study 2:

Thirty-three children from an additional three socially mixed schools in the South-East of England were added to the Study 1 sample (total N = 74). These additional children were older (\(M_{age}\) = 12.57, SD = 0.29, 17 female)

Do the younger (Study 1) children differ in any way from the older (additional) children?

We can check this through data visualization. Our aim is to present the distributions of variables side-by-side or superimposed to ensure easy comparison. We can do this in different ways, so I will demonstrate one approach with an outline explanation of the actions, and offer suggestions for further approaches.

I am going to process the data before I do the plotting. I will re-use the code I used before (see Section 1.7.4.2) with one additional change. I will add a line to create a group coding variable. This addition shows you how to do an action that is very often useful in the data processing part of your workflow.

Data processing

You have seen that the @ricketts2021 report states that an additional group of children was recruited for the investigation’s second study. How do we know who they are? If you recall the summary view of the complete dataset, there is one variable we can use to code group identity.

summary(conc.orth$Study)Study1&2 Study2

655 512 This summary tells us that we have 512 observations concerning the additional group of children recruited for Study 2, and 655 observations for the (younger) children whose data were analyzed for both Study 1 and Study 2 (i.e., coded as Study1&2 in the Study variable column). We can use this information to create a coding variable. (If we had age data, we could use that instead but we do not.) This is how we do that.

conc.orth.subjs <- conc.orth %>%

group_by(Participant) %>%

mutate(mean.score = mean(Levenshtein.Score)) %>%

ungroup() %>%

distinct(Participant, .keep_all = TRUE) %>%

mutate(age.group = fct_recode(Study,

"young" = "Study1&2",

"old" = "Study2"

)) %>%

select(WASImRS:BPVSRS, mean.score, Participant, age.group)The code block is mostly the same as the code I used in Section Section 1.7.4.2 to extract the data for each participant, with two changes:

- First,

mutate(age.group = fct_recode(...)tells R that I want to create a new variableage.groupthrough the process of recoding, withfct_recode(...)the variable I specify next, in the way that I specify. fct_recode(Study, ...)tells R I want to recode the variableStudy."young" = "Study1&2", "old" = "Study2"specifies what I want recoded.

- I am telling R to look in the

Studycolumn and (a.) whenever it finds the valueStudy1&2replace it withyoungwhereas (b.) whenever it finds the valueStudy2replace it withold. - Notice that the syntax in recoding is

fct_recode: “new name” = “old name”. - Having done that, I tell R to pipe the data, including the recoded variable, to the next step.

select(WASImRS:BPVSRS, mean.score, Participant, age.group)where I add the new recoded variable to the selection of variables I want to include in the new datasetconc.orth.subjs.

Notice that R handles categorical or nominal variables like Study (or, in other data, variables e.g. gender, education or ethnicity) as factors.

- Within a classification scheme like education, we may have different classes or categories or groups e.g. “further, higher, school”. We can code these different classes with numbers (e.g. \(school = 1\)) or with words “further, higher, school”. Whatever we use, the different classes or groups are referred to as levels and each level has a name.